Master projects

Here you can find all our available master projects.

Open Projects (126)

-

(PwC) LLMs for Data Analysis Pipelines

LLMs have the potential to make data more accessible to a non-technical audience through prompt-based analytics. They also have the potential to help make engineering teams more efficient by quickly producing a first draft of a data pipeline. Both of these applications hinge on appropriate …

Bart Engelen

-

(PwC) Question bank generator for Applied GenAI

PwC has developed several GenAI applications using models that have been trained on a large corpus of text and can retrieve relevant parts of that corpus when prompted by a user's questions (known as RAG-LLMs). Though many …

Bart Engelen

-

(PwC) Enhancing Anomaly Detection: Integrating User Explainability

PwC developed an unsupervised Transformer-based anomaly detection tool to enhance insights into machine functionality in factories by analyzing machinery timeseries sensor data. However, the current solution lacks explainability for why certain time windows are flagged as anomalous. Root cause algorithms, such as Bayesian inference, …

Bart Engelen

-

(PwC) Write your thesis at PwC Advisory Data Analytics

Do you want to write your master's thesis about a Data & AI related topic on real-world client cases? We offer you the opportunity to write your thesis within PwC's Data Analytics Advisory team. This is a multidisciplinary team that uses its analytical skills …

Bart Engelen

-

Synopses meet machine learning

Training ML models over big data is a time-consuming and energy-hungry process. Furthermore, it requires full access to the data, which is challenging in many use cases due to the size of the data. The problem is particularly challenging when the data is read …

Odysseas Papapetrou

Mykola Pechenizkiy

-

Enhancing Real-World Imitation Learning with Reinforcement Learning

This TU/e master project is set up in collaboration with a robotics start-up in Eindhoven. Company Overview: TeleOperation Services is an innovative company based in Woensel-Noord, Eindhoven. Our cutting-edge AI-driven system empowers robotic arms to imitate tasks and perform them independently with human-like finesse and speed. Through …

Bram Grooten

Thiago Simão

-

Reinforcement Learning for Efficient Causal Discovery

Understanding causal relationships within data is essential across fields such as healthcare, economics, and social sciences, where knowing "what causes what" guides decision-making and policy. Causal discovery, the process of identifying these relationships and structuring them in causal graphs, remains challenging, especially in complex, …

Devendra Dhami

Maryam Tavakol

-

Causal Discovery for Offline Model-Based Reinforcement Learning

Reinforcement Learning (RL) has proven effective in a variety of complex decision-making tasks. However, traditional RL requires extensive online interactions, making it costly and, in some domains, impractical due to constraints on safety, time, or resource availability. Offline RL, which relies solely on pre-collected …

Maryam Tavakol

Devendra Dhami

-

Context-Aware Model-Based Offline Reinforcement Learning

Offline Reinforcement Learning (RL) deals with problems where simulation or online interaction is impractical, costly, and/or dangerous, making it possible to automate a wide range of applications from healthcare and education to finance and robotics. However, learning new policies from offline data suffers from distributional …

Maryam Tavakol

-

Federated Learning for detecting Lung Disease in Developing Countries

Background: Delft Imaging develops mobile X-Ray machines that allow screening in low-resource settings, such as developing countries or remote locations. Due to the lack of qualified professionals in these settings, they use computer vision models to automate screening of patients for diseases such as …

Tim d'Hondt

Mykola Pechenizkiy

-

Safe Contrastive Imitation Learning

Motivation: In safety-critical domains such as autonomous driving, healthcare robotics, and industrial automation, it is imperative for autonomous agents to not only perform tasks efficiently but also safely. Traditional imitation learning enables agents to learn behaviors by mimicking expert demonstrations. However, these methods often overlook …

Tristan Tomilin

Thiago Simão

-

Empirical study of the social life of Knowledge graphs

Company: Marel. Location: Boxmeer. Background: Marel, a global leader in the food processing industry, specializes in designing and manufacturing advanced machinery for processing poultry, meat, and fish. Effective knowledge sharing among engineers at Marel is crucial to support business operations. However, not all knowledge …

George Fletcher

Zeno van Cauter

-

Empirical study of knowledge evolution through Knowledge Graph changes

Company: Marel. Location: Boxmeer. Background: Marel, a global leader in the food processing industry, specializes in designing and manufacturing advanced machinery for processing poultry, meat, and fish. Effective knowledge sharing among engineers at Marel is important for sustaining business operations. Problem description: In this project …

George Fletcher

Sepehr Sadoughi

-

Part replacement identification using Knowledge Graphs

(This project is also available as an internship.) Company: Marel. Location: Boxmeer. Background: It is important for industrial equipment developers to provide accurate part replacements to their customers. Parts can wear over time or break, and having suitable replacements is a dynamic process based on availability, …

Mykola Pechenizkiy

Zeno van Cauter

-

Maintenance and Real-Time Updating of Deployed Knowledge Graphs

(This project is also available as an internship.) Company: Marel. Location: Boxmeer. Background: Knowledge Graphs have emerged as a powerful tool for representing vast amounts of interconnected data. By structuring data in a graph format, enterprises can uncover relationships and insights that are often hidden in traditional …

Nick Yakovets

Zeno van Cauter

-

Context-aware knowledge retrieval from KGs for technical support thinking assistant

Background: Knowledge Graphs (KGs) are structured representations of knowledge that organize information in a graph-based format, where entities (nodes) and the relationships between them (edges) represent facts in an interconnected network. This graph-based structure enables encoding complex interrelationships and semantic information, making it an …

Nick Yakovets

Sepehr Sadoughi

-

Pattern discovery to improve overlay control loop by using Bayesian inference tooling

This assignment aims to detect and quantify persistent overlay improvements by systematically investigating a larger data set. It will provide you with insights into the overlay performance of ASML lithography machines. You will also learn how ASML maintains machine performance via its drift control strategy. As …

Erik Quaeghebeur

Sejong Park, Hamideh Rostami

-

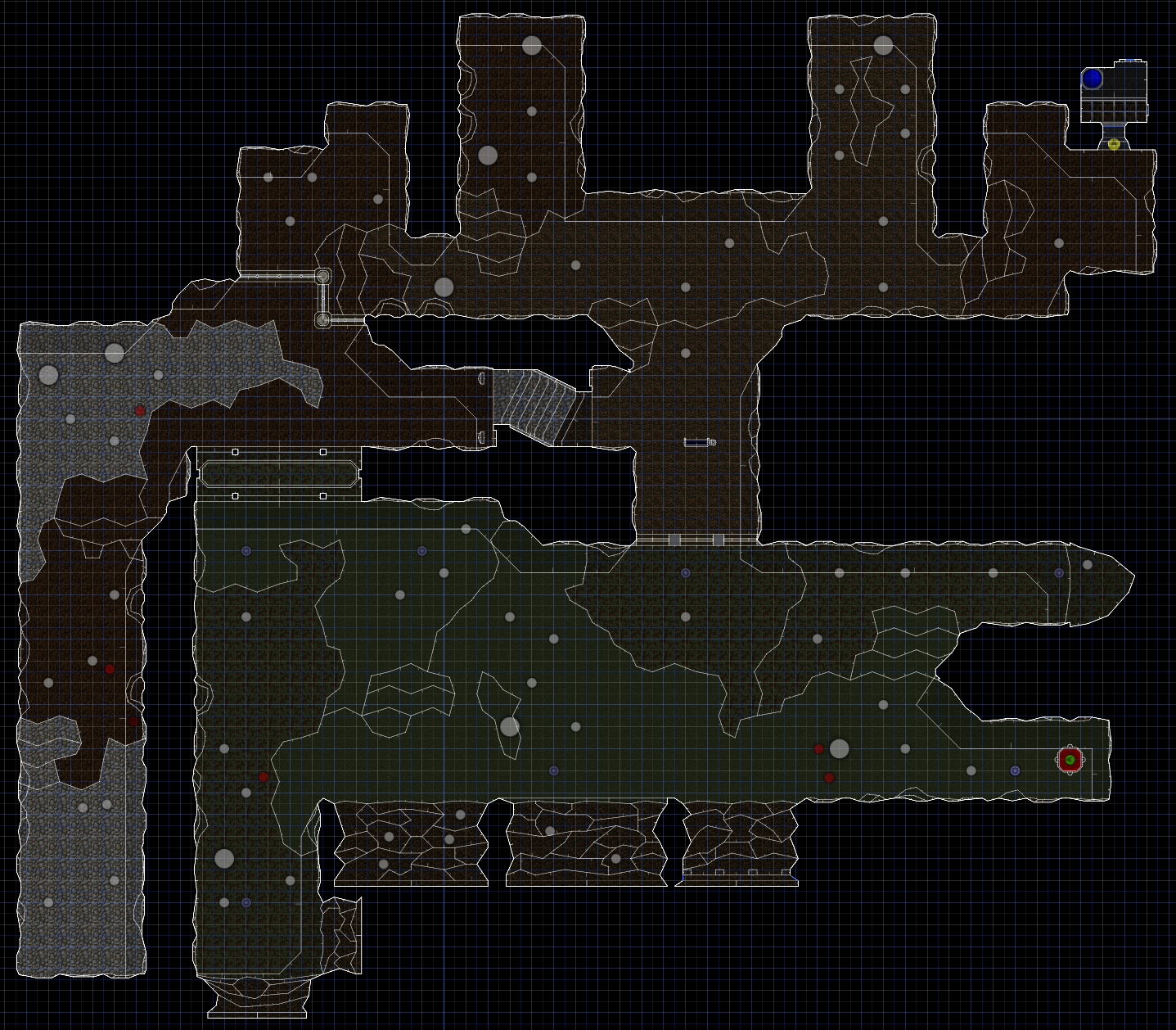

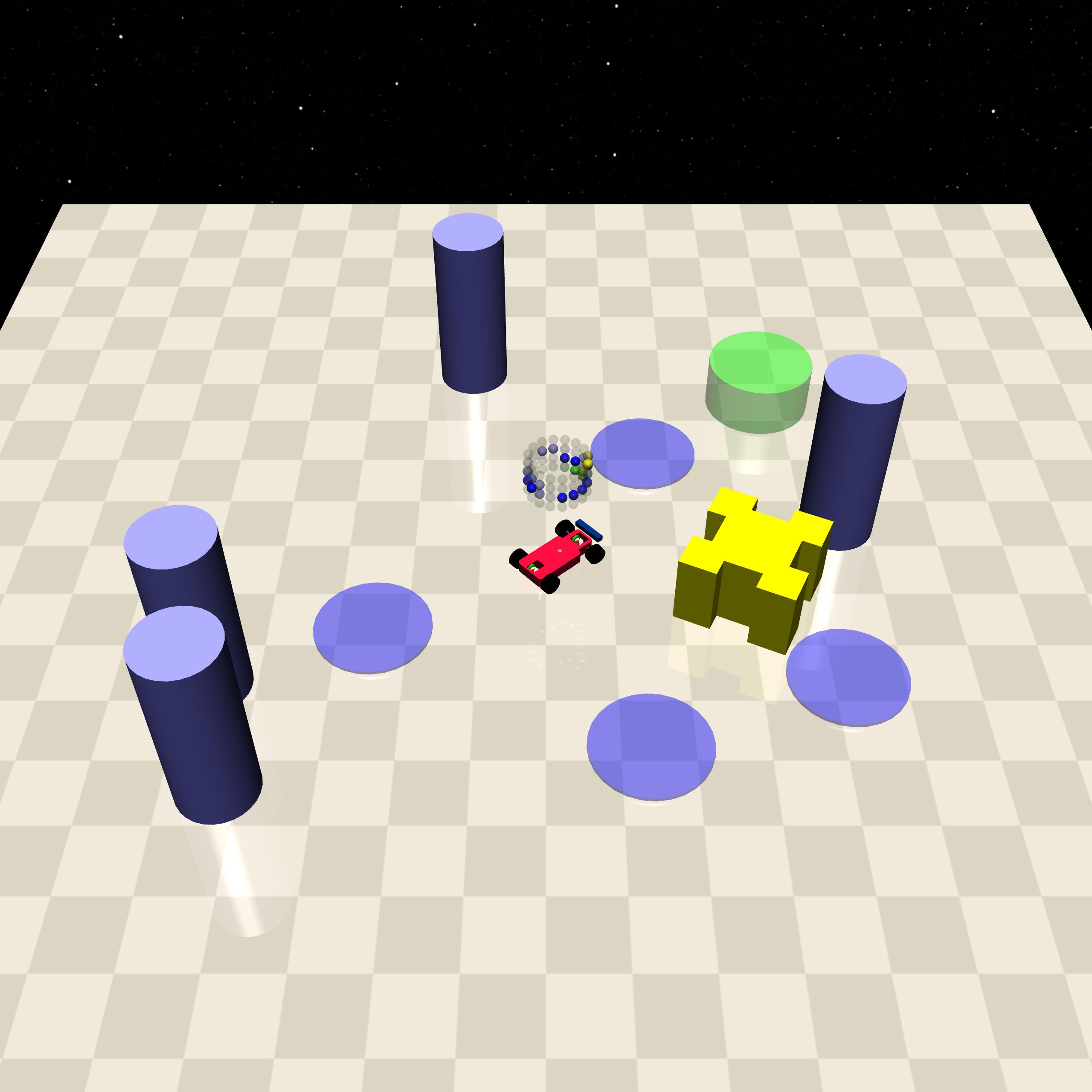

Procedural 3D Environment Generation for Image-Based Reinforcement Learning

As autonomous systems evolve, static simulation environments for training reinforcement learning agents increasingly fail to prepare algorithms for real-world variability. Procedural content generation (PCG) [5] in 3D environments offers a low-cost solution to automatically creating a near-infinite variety of dynamic training scenarios. This has the …

Tristan Tomilin

Meng Fang

-

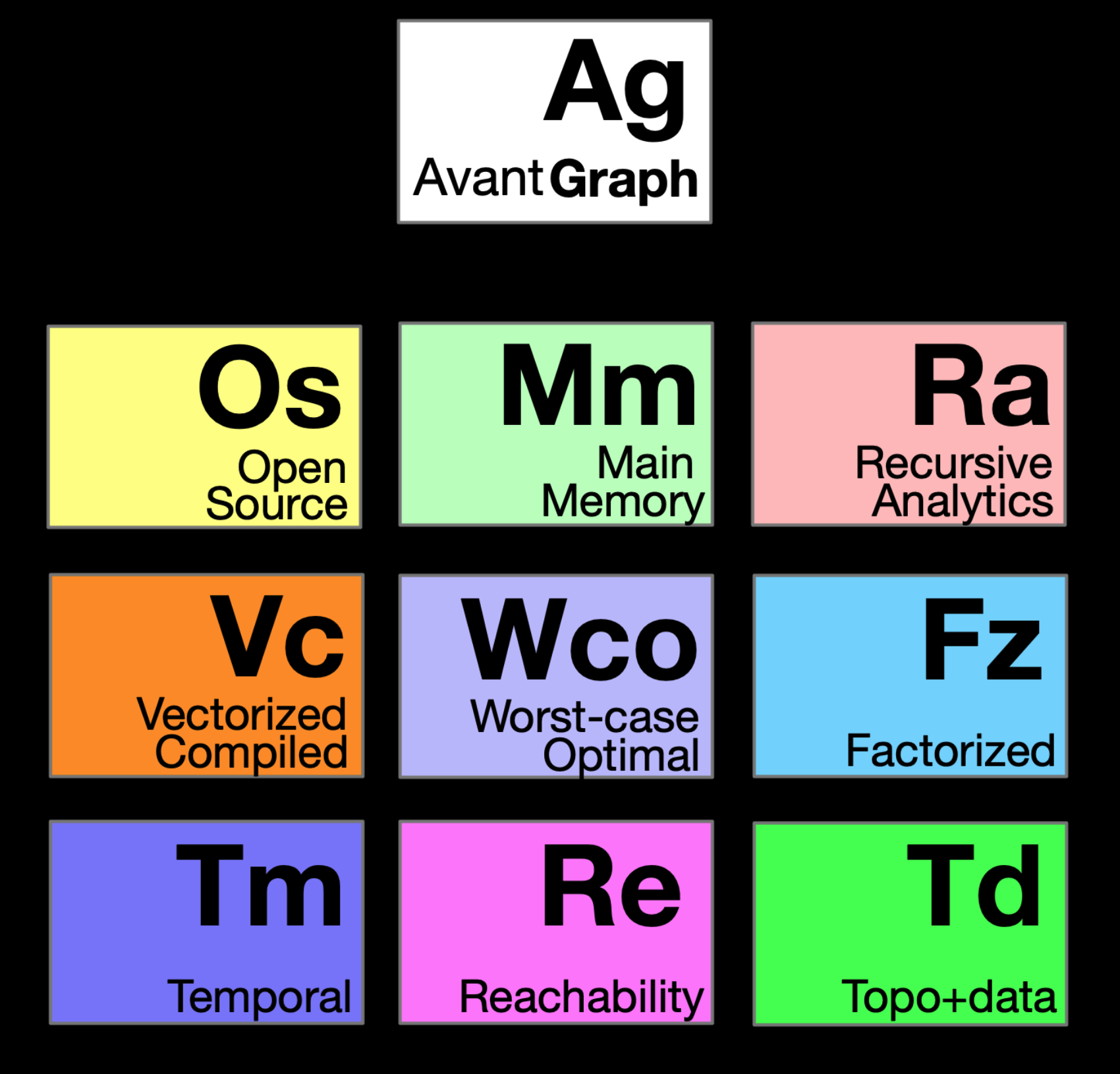

Implementing the Graph Pattern Matching Language (GPML) Fragment for GQL on AvantGraph

Graph databases have emerged as a powerful contender to traditional relational databases, especially in areas where complex relationships and interconnections are required, such as social networks and knowledge graphs. This has led to the development of various query languages to interact with graph databases, …

Nick Yakovets

Sepehr Sadoughi

-

A Matrix Factorization approach to Exceptional Model Mining

Exceptional Model Mining aims to identify subgroups in the dataset that behave somehow exceptionally. It differs from a clustering approach since subgroups may overlap; not all data points are assigned to a cluster. However, consequently, the list of subgroups often contains many similar, redundant …

Rianne Schouten

Sibylle Hess

-

Exceptional Model Mining with Missing Data

In this project, we develop an instance of Exceptional Model Mining using the HBSC dataset (together with UU and Trimbos Institute). The HBSC study is repeated every four years among Dutch adolescents and, among other things, collects information about their drug and alcohol use. We …

Rianne Schouten

Wouter Duivesteijn

-

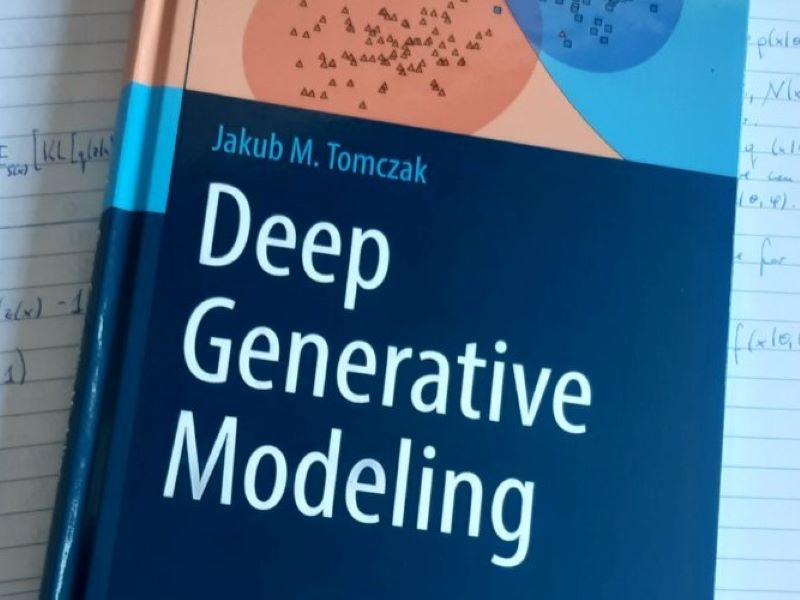

Generating synthetic data with generative modeling

In this project, we aim to generate a synthetic dataset that has properties similar to those of an existing, longitudinal, medical dataset. In particular, we work together with the Dutch south west Psoriatic Arthritis Registry (DEPAR) study (https://ciceroreumatologie.nl/depar), situated at Erasmus MC. Generating a synthetic version …

Rianne Schouten

Jakub Tomczak

-

Analyzing mathematical learning abilities in children using re-description mining for hierarchical data

In this project, we analyze learning behavior in young children. We work with data collected by the Turku Research Institute for Learning Analytics, where children perform a variety of computer-assisted tasks such as comparing numbers and simple calculation tasks. Re-description mining is a …

Rianne Schouten

Mykola Pechenizkiy

-

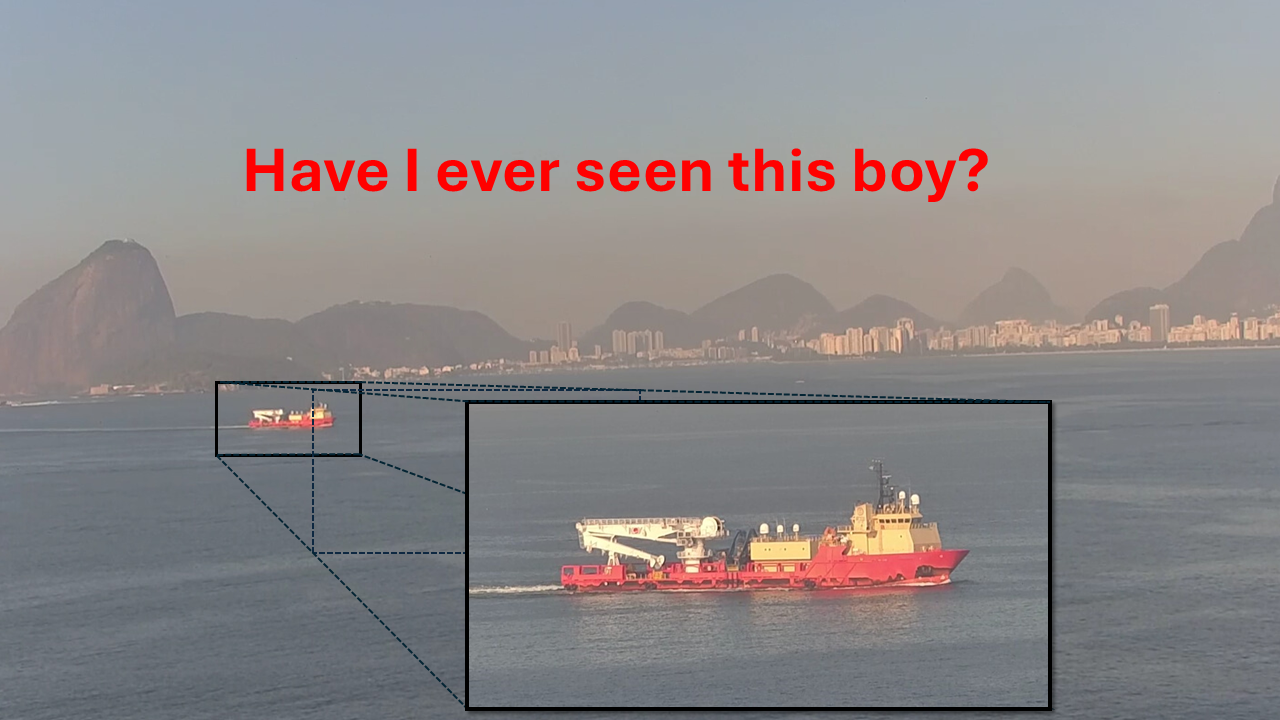

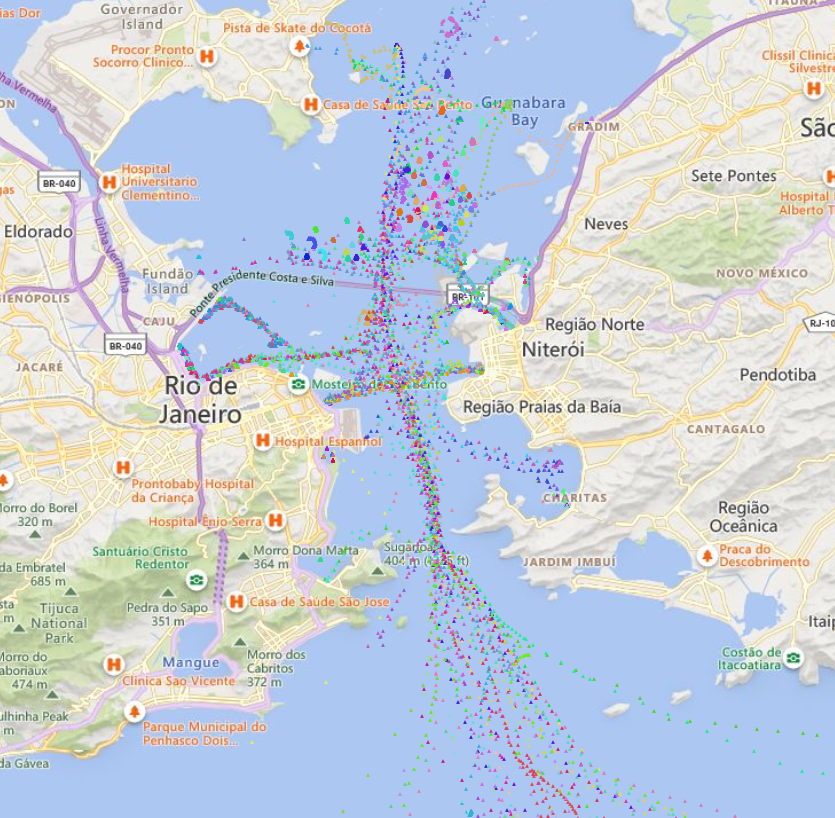

Alternative latent space models for vessel re-identification

Coastal surveillance cameras are often used to detect (distinguish from the background) and recognize (as belonging to a class) non-cooperative vessels, i.e. vessels not reporting their position and identity using an AIS [1] transponder through a TDMA network such that nearby AIS base stations …

Mykola Pechenizkiy

Stiven Schwanz Dias

-

Vision-centric image tokenization in the generative transformer era

Generative autoregressive next token prediction has shown impressive success in LLMs. Several works have attempted to extend the success of LLMs to vision-language tasks with VLMs. While a VLM can be designed specifically for image-to-text tasks like visual question answering, many works also attempt …

Bahram Zonooz

Elahe Arani

-

Maritime traffic anomaly detection

Coastal surveillance systems rely on multiple sensors to perform object assessment [1], i.e., to detect and track the sequence of vessels' states including their position and velocity (where are the vessels at a given timestamp?). In general, surface radars are employed as a primary …

Mykola Pechenizkiy

Stiven Schwanz Dias

-

Leveraging Language Semantics for Enhanced Understanding and Generalization

The field of artificial intelligence has seen unprecedented growth in recent years, particularly with the advent of foundation models and large language models (LLMs). These models have showcased remarkable capabilities across a broad spectrum of applications, including natural language processing and multimodal tasks. Traditionally, …

Bahram Zonooz

Elahe Arani

-

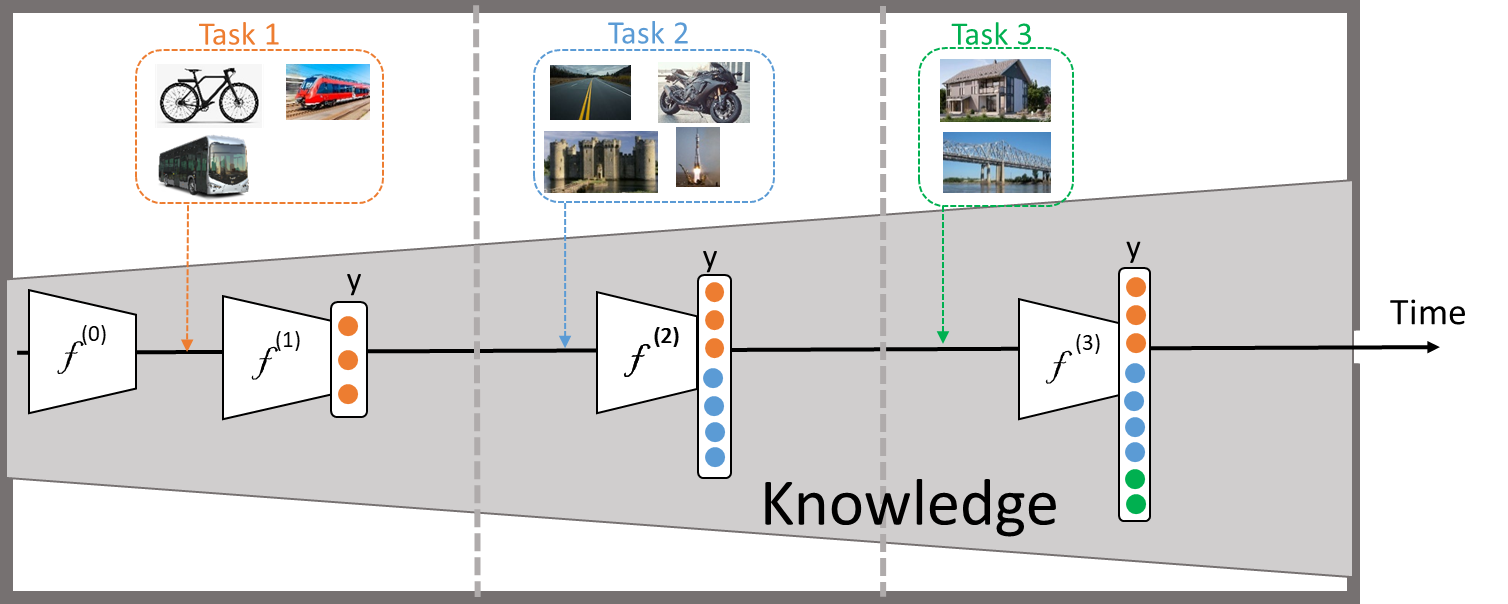

Architectural Analysis of Vision Transformers in Continual Learning

Deep neural networks (DNN) deployed in the real world are frequently exposed to non-stationary data distributions and required to sequentially learn multiple tasks. This requires that DNNs acquire new knowledge while retaining previously obtained knowledge and this is imperative in applications like autonomous driving …

Bahram Zonooz

Elahe Arani

-

True continual learners in the wild: CL beyond artificial constraints / datasets

Continual Learning (CL) is a learning paradigm in which computational systems progressively acquire multiple tasks as new data becomes available over time. An effective CL system must find a balance between being adaptable to integrate new information and maintaining stability to prevent disruption of …

Bahram Zonooz

Elahe Arani

-

Exploring sparsity in lifelong learning

In the dynamic world, deep neural networks (DNNs) must continually adapt to new data and environments. Unlike humans, who can learn continually without forgetting past knowledge, DNNs often suffer from catastrophic forgetting when exposed to new data, causing them to lose previously acquired information. …

Bahram Zonooz

Elahe Arani

-

Image representation learning in autoregressive transformers

With the recent success of LLMs, and the strong potential of multi-modal learning from both text and vision, several works have framed images as sequences to conform with generative sequence-to-sequence encoder-decoder or decoder-based transformers [1]. Such formulations present advantages such as unified architectures …

Bahram Zonooz

Elahe Arani

-

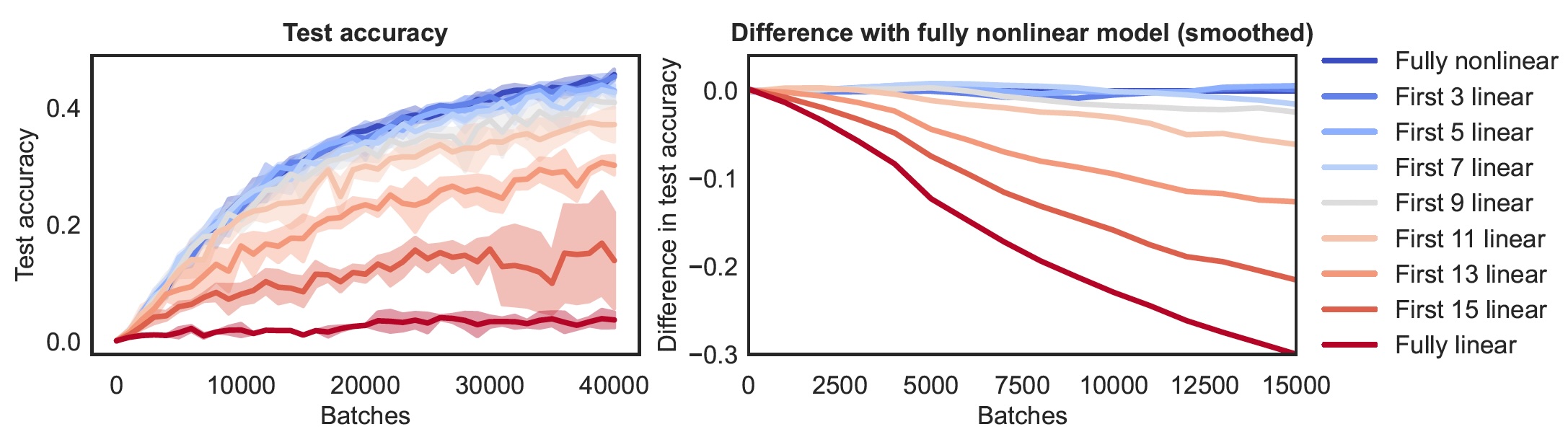

Understanding deep learning – exploring the development of complexity in neural networks over depth and time

Introduction: When we train deep, nonlinear neural networks, we often assume that the applied transformations at every layer are effectively nonlinear. Earlier work (Kalimeris et al., 2019) has shown that in the beginning of training, the complete function that deep, nonlinear networks implement is close …

Hannah Pinson

Aurélien Boland

-

Examples of missing not at random (MNAR) data that are difficult for classifiers

Datasets often contain missing data. A good introduction can be found here: https://stefvanbuuren.name/fimd/. This missingness might be "completely at random" (MCAR). This occurs when the probability of being missing is the same for all cases. An example of MCAR data …

Arthur van Camp

-

Implementation of inference algorithms for the imprecise Plackett–Luce model

The Plackett–Luce model is a popular parametric probabilistic model to define distributions between rankings of objects, modelling for instance observed preferences of users or ranked performances of algorithms. Since such observations may be scarce (users may provide partial preferences, or not all algorithms are …

Arthur van Camp

-

Breeding Program Optimization via Offline Reinforcement Learning

Crop breeding programs aim to develop new cultivars with desirable traits through controlled mating within a population, enhancing agricultural productivity while reducing land use, greenhouse gas emissions, and water consumption. However, these programs face challenges like long turnover times, complex decision-making, long-term goals, and climate …

Maryam Tavakol

Ioannis Athanasiadis

-

Uncertainty Estimation in Model-Based Offline Reinforcement Learning using Random Networks

Offline Reinforcement Learning (RL) deals with problems where simulation or online interaction is impractical, costly, and/or dangerous, making it possible to automate a wide range of applications from healthcare and education to finance and robotics. However, learning new policies from offline data suffers from distributional …

Maryam Tavakol

-

Long-term Fairness with Offline Reinforcement Learning

One of the main concerns in recent AI research is that most data-driven approaches preserve the bias or unfairness present in the collected (offline) data in the resulting models, which could lead to harmful social and ethical effects in society. Fairness-aware machine learning has …

Maryam Tavakol

-

Object-relational mapping for key-value databases

Object-relational mappers (ORM) like Django allow one to interact with a database in an object-oriented manner, and provide constructs for easy deployment of web-based applications that depend on a database. The underlying database of an ORM is typically a SQL database. It is unclear …

Robert Brijder

-

Efficient database infrastructure for libraries

Database management systems for libraries (as in, institutions for lending books) need to satisfy a number of specific needs, in particular regarding the types of queries that need to be supported and regarding performance of the queries that are most often executed. In this …

Robert Brijder

-

Show me the path: do people only care about paths?

Paths in graphs are natural, arising in domains as diverse as social networks (e.g., which people are in the same community?), communication networks (e.g., how does information spread via SMS messages?), and literary networks (e.g., which scientific papers are the most influential, in terms …

George Fletcher

Sourav Bhowmick (NTU Singapore)

-

AI for 3D Concrete Printing

Designing 3D printable materials has been, so far, a trial-and-error process dependent on human knowledge and effort; hence time-consuming and wasteful. To predict certain properties of 3DCP, material scientists have used modelling and simulations for decades. While helpful in many ways, models mostly require …

Mykola Pechenizkiy

Sandra Lucas and Önder Babur

-

Continual Reinforcement Learning with Language Instructions

Continual reinforcement learning (CRL) stands as a pivotal paradigm in the AI landscape, fostering the development of adaptive and lifelong learning agents. This project delves into the intersection of CRL and natural language processing within the immersive realm of 3D simulation environments. The integration …

Tristan Tomilin

Meng Fang

-

AI for histopathology of melanoma

Background: Melanoma is a form of skin cancer that originates in melanin-producing cells known as melanocytes. While other skin cancer types occur more frequently, melanoma is the most dangerous due to the high likelihood of metastasis if not treated early. The incidence rate of melanoma has …

Sibylle Hess

Mitko Veta

-

Counterfactual explanations

I plan to offer a few assignments on counterfactual explanations: counterfactual explanations on evolving data; feasibility, actionability and personalization of counterfactual explanations; counterfactual explanations for spotting unwanted bias in predictive model behaviour; value alignment for counterfactual explanations (in collaboration with Emily Sullivan); and counterfactual explanations for behaviour change.

Mykola Pechenizkiy

-

Enhancing Reconstructive Surgery Decision-Making

The goal of this project is to come up with a transformer or any other smart solution to (in a one-sentence, oversimplified description) find mappings between an image of the current patient condition, possible surgery actions, and the preferred outcome image. A more detailed …

Mykola Pechenizkiy

-

Implementing inference algorithms for choice functions

In recent years, imprecise-probabilistic choice functions have gained growing interest, primarily from a theoretical point of view. These versatile and expressive uncertainty models have demonstrated their capacity to represent decision-making scenarios that extend beyond simple pairwise comparisons of options, accommodating situations of indecision as …

Arthur van Camp

Cassio de Campos

-

Generative Random Forests: The Next Level

The work on generative random forests has started, but there is a long way to go to make them practical. This project aims at studying the drawbacks of such models and improving them with better ensemble ideas, gradient boosting, and/or other techniques already employed with decision …

Cassio de Campos

-

Probabilistic Circuits versus Bayesian networks

This project aims to compare two different types of generative models: tractable probabilistic circuits and Bayesian networks of bounded tree-width, and potentially have tools to translate between them (when possible). Probabilistic circuits have been recently applied to a number of tasks, but there is …

Cassio de Campos

-

Hybrid Bayesian networks

This internal project aims at developing and testing (for example in classification tasks) a generative model based on probabilistic graphical models for domains with continuous and categorical variables. We want to learn both the graph structure and parameters of such models while constraining their …

Cassio de Campos

-

Synthetic data generation for causal learning

An arguably major difficulty for improving causal inferences is the lack of availability of data. While observational data are abundant, interventional data are not. This internal project aims at creating software tools to generate data that can be useful for testing causal learning approaches. …

Cassio de Campos

-

Scalable Implementation of Probabilistic Circuits

This internal project aims at designing and developing a usable software package for learning and reasoning with probabilistic circuits. Probabilistic circuits are models which can represent complicated mixture models, and their computation circuit can be wide and deep. Because they have a structure which …

Cassio de Campos

-

Algorithms for forward irrelevance with choice functions

In recent years, imprecise-probabilistic choice functions have gained growing interest, primarily from a theoretical point of view. These versatile and expressive uncertainty models have demonstrated their capacity to represent decision-making scenarios that extend beyond simple pairwise comparisons of options, accommodating situations of indecision as …

Arthur van Camp

Cassio de Campos

-

Concepts of independence for choice functions

In recent years, imprecise-probabilistic choice functions have gained growing interest, primarily from a theoretical point of view. These versatile and expressive uncertainty models have demonstrated their capacity to represent decision-making scenarios that extend beyond simple pairwise comparisons of options, accommodating situations of indecision as …

Arthur van Camp

Cassio de Campos

-

Local inference algorithms for choice functions

In recent years, imprecise-probabilistic choice functions have gained growing interest, primarily from a theoretical point of view. These versatile and expressive uncertainty models have demonstrated their capacity to represent decision-making scenarios that extend beyond simple pairwise comparisons of options, accommodating situations of indecision as …

Arthur van Camp

Cassio de Campos

-

Interventional Whittle Sum-Product Networks

Whittle sum-product networks [1] model the joint distribution of multivariate time series by leveraging the Whittle approximation, casting the likelihood in the frequency domain, and placing a complex-valued sum-product network over the frequencies. The conditional independence relations among the time series can then be …

Devendra Dhami

-

Dynamic Knowledge Graph Embeddings

Knowledge graph embeddings are an important area of research within machine learning and have become a necessity due to the importance of reasoning about objects, their attributes, and their relations in large graphs. There have been several approaches that have been explored and can be …

Devendra Dhami

-

Safe reinforcement learning with decision transformers

Safety is a core challenge for the deployment of reinforcement learning (RL) in real-world applications [1]. In applications such as recommender systems, this means the agent should respect budget constraints [2]. In this case, the RL agent must compute a policy condition of the …

Thiago Simão

-

Learning Decision Trees to Reduce the Sample Complexity of Offline Reinforcement Learning

Reinforcement Learning (RL) deals with problems that can be modeled as a Markov decision process (MDP) where the transition function is unknown. When an arbitrary policy was already in execution, and the experiences with the environment were recorded in a dataset, an offline RL …

Thiago Simão

-

Reinforcement Learning for Configurable Systems

Nowadays, most software systems are configurable, meaning that we can tailor the settings to the specific needs of each user. Furthermore, we may already have some data available indicating each user's preferences and the software's performance under each configuration. This way, we can compute …

Thiago Simão

-

Deriving Valuation Bases to Expand GP-Growth to More EMM Model Classes

See PDF

Wouter Duivesteijn

-

Deriving Upper Confidence Bounds to Expand Monte-Carlo Tree Search to More EMM Model Classes

See PDF

Wouter Duivesteijn

-

Surveying to Bring Order in the Jungle of Supervised Local Pattern Mining Implementations

See PDF

Wouter Duivesteijn

-

Expanding Exceptional Model Mining on Unstructured Data

See PDF. As attachment, see also https://wwwis.win.tue.nl/~wouter/MSc/Niels.pdf

Wouter Duivesteijn

-

Finding the Curse of Dimensionality Sweet Spot Between Traditional Clustering and Deep Clustering

See PDF

Wouter Duivesteijn

Sibylle Hess

-

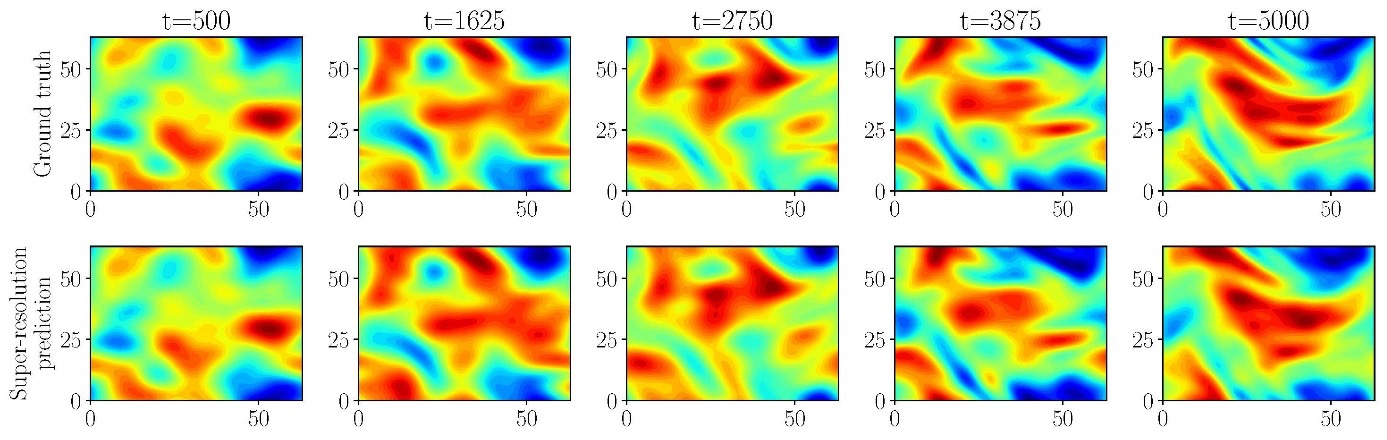

Equivariant Neural Simulators for Probabilistic Fluid Dynamics Simulation

TL;DR: In this project, you will focus on developing a model architecture that can efficiently simulate fluid dynamics, while taking into account the vast amount of domain knowledge in the field in the form of symmetries, as well as the modeling of stochastic effects …

Vlado Menkovski

Koen Minartz

-

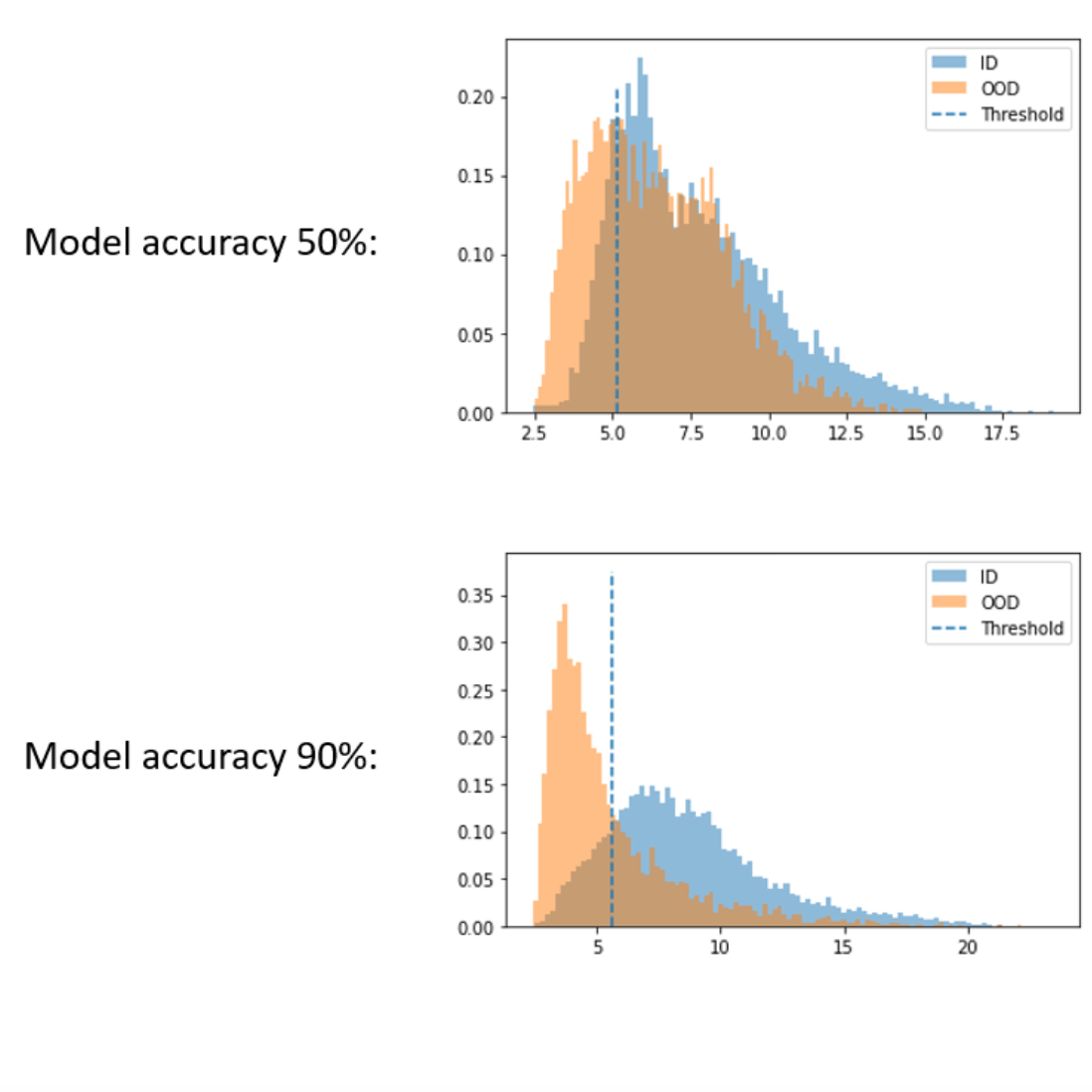

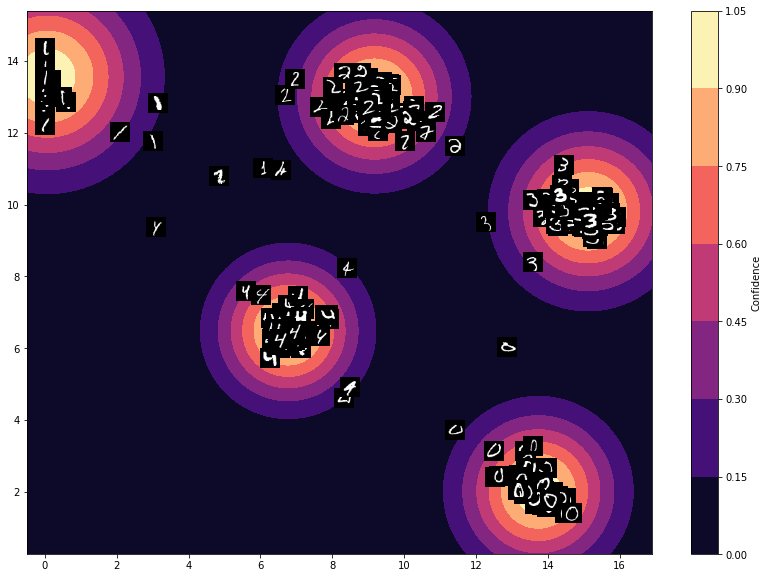

Understanding Out-of-distribution Detection

There are numerous methods for out-of-distribution (OOD) detection and related problems in deep learning; see e.g. [1] for an overview. Many of these, however, only work well in highly fine-tuned settings and are not well understood in a broader context. In this project, you would …

Sibylle Hess

Jan Moraal

-

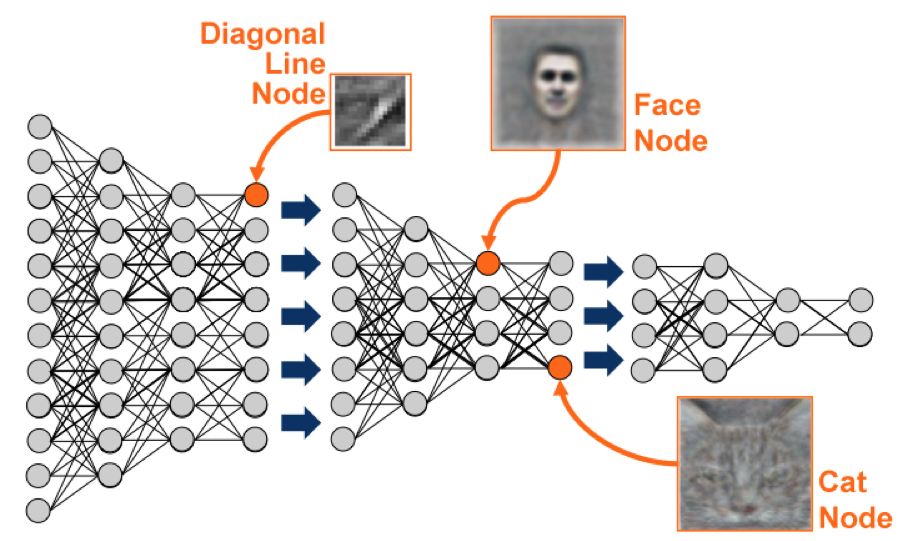

Explaining learned features by generative models

In order to get some insight into the inner workings of deep neural network classifiers, a method that enables the interpretation of learned features would be very helpful. This master project is loosely based on the approach presented in [1], where a GAN is …

Sibylle Hess

Wil Michiels

-

Topics in Deep Clustering

Deep clustering is a well-researched field with promising approaches. Traditional nonconvex clustering methods require the definition of a kernel matrix, whose parameters vastly influence the result, and are hence difficult to specify. In turn, the promise of deep clustering is that a feature transformation …

Sibylle Hess

-

Physics-Informed Neural Simulation of Cellular Dynamics

TL;DR: In this project, you will develop a framework for integrating domain knowledge into generative models for cellular dynamics simulations, and apply the method to (synthetic) data of e.g. cancer cell migration. Project description: Studying the variety of mechanisms through which cells migrate and interact …

Vlado Menkovski

Koen Minartz

-

ML Simulation of Nuclear Fusion reactors

Thermonuclear fusion holds the promise of generating clean energy on a large scale. One promising approach for controlled fusion power generation is the tokamak, a torus-shaped device that magnetically confines the fusion plasma in its vessel. Currently, not all physical processes in these plasmas …

Vlado Menkovski

Yoeri Poels

-

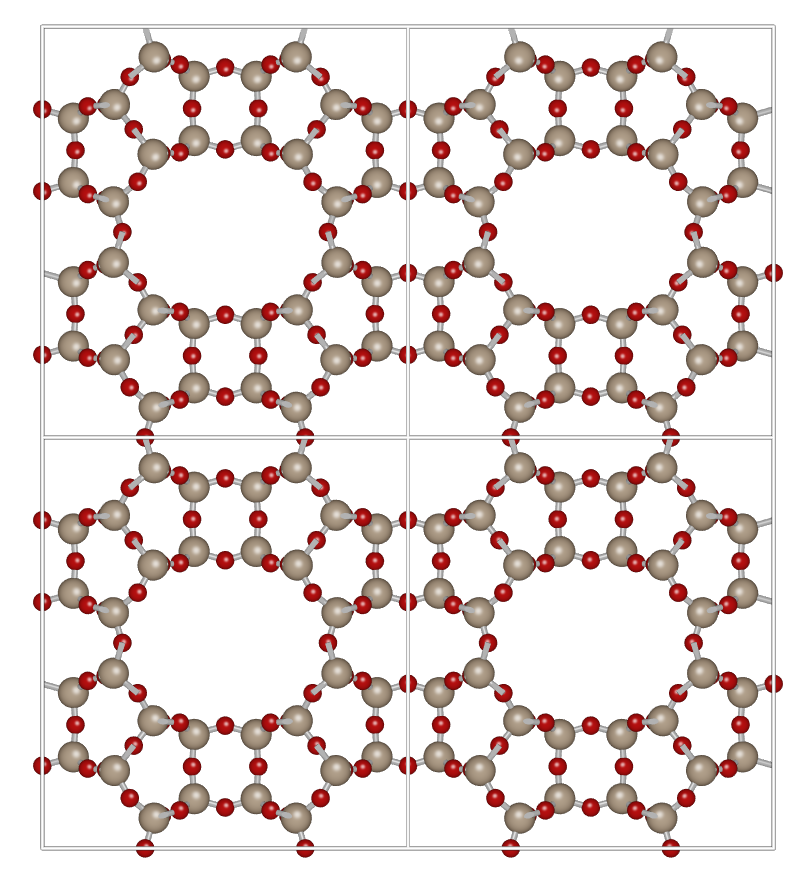

Conditional Generation of Materials for Carbon Capture

In recent years, the urgency of addressing the climate crisis, resulting from escalating greenhouse gas emissions, has increased. A potential solution for the increasing amount of CO2 in the air is carbon capture. Zeolites are potential candidate materials for carbon capture, as they are …

Vlado Menkovski

Marko Petkovic

-

Evaluating Explanations of Model Predictions

The black-box nature of neural networks prohibits their application in impactful areas, such as health care or generally anything that would have consequences in the real world. In response to this, the field of Explainable AI (XAI) emerged. State-of-the-art methods in XAI define a …

Sibylle Hess

Wil Michiels

-

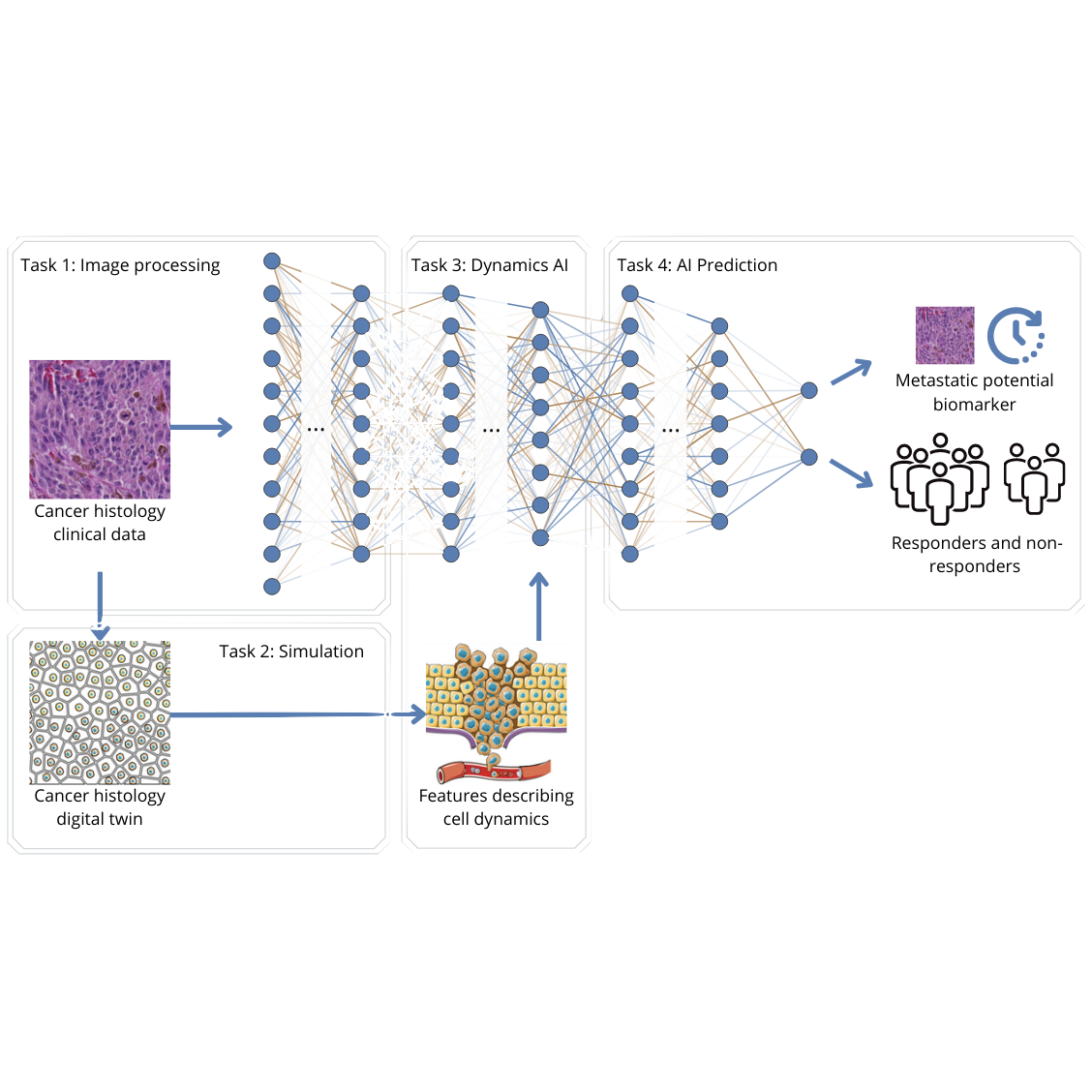

Physics Informed AI for improved cancer prognosis

In order to metastasize, cancer cells need to move. Estimating the ability for cells to move, i.e. their dynamics, or so-called migration potential, is a promising new indicator for cancer patient prognosis (overall survival) and response to therapy. However, predicting the migration potential from …

Sibylle Hess

Secondary supervisors could be Liesbeth Janssen or Mitko Veta.

-

Comparison of E(n)-equivariant GNNs for metamaterial simulation

Soft, porous metamaterials are materials that consist of a flexible base material (e.g., rubber-like material) with pores of a carefully designed shape in it. Under external loading (a pressure applied on the outside surface, mechanical constraints, or other interactions), they deform, which in turn …

Vlado Menkovski

Ondrej Rokos, Fleur Hendriks

-

A survey on correlations and similarities for spatiotemporal data

Finding pairs of locations that present interesting correlations or similarities (e.g., in their weather, development rate, or population statistics through time) can provide useful insights in different contexts/domains. For example, if a country observes that two different cities have a high similarity on the …

Odysseas Papapetrou

-

Discovery and maintenance of heavy hitters over sliding windows, in a distributed environment.

Synopses are extensively used for summarizing high-frequency streaming data, e.g., input from sensors, network packets, financial transactions. Some examples include Count-Min sketches, Bloom filters, AMS sketches, samples, and histograms. This project will focus on designing, developing, and evaluating synopses for the discovery of heavy …

Odysseas Papapetrou

-

Detection of similarities and correlations in multidimensional time series

Correlations are extensively used in all data-intensive disciplines, to identify relations between the data (e.g., relations between stocks, or between medical conditions and genetic factors). Most algorithms consider one-dimensional time series. For example, in the context of finance, the time series might represent the …

Odysseas Papapetrou

-

Lagged multivariate correlations

Correlations are extensively used in all data-intensive disciplines, to identify relations between the data (e.g., relations between stocks, or between medical conditions and genetic factors). The 'industry-standard' correlations are pairwise correlations, i.e., correlations between two variables. Multivariate correlations are correlations between three or more …

Odysseas Papapetrou

-

A Counterpart of SQL for Matrices and Tensors

Most commercial databases are relational and use SQL to query the data. Often, however, data is not relational. Indeed, data scientists often deal with matrices instead of relations. A counterpart of SQL for matrices and tensors is therefore needed, and initial progress has …

Robert Brijder

-

Efficient Unbiased Training of Large-scale Distributed RL

It is widely known that training deep neural networks on huge datasets improves learning. However, huge datasets and deep neural networks can no longer be trained on a single machine. One common solution is to train using distributed systems. In addition to traditional data-centers, …

Maryam Tavakol

Ali Ramezani-Kebrya

-

Programming Database Theory: Using a theorem prover to formalize database theory

Proving a theorem is similar to programming: in both cases the solution is a sequence of precise instructions to obtain the output/theorem given the input/assumptions. In fact, there are programming languages such as Lean, Coq, and Isabelle that can be used to prove theorems. …

Robert Brijder

-

Peer-to-peer Federated Learning

--update--: This project is now taken by Davis Eisaks. The goal of this project is to study how to train a machine learning model in a gossip-based approach, where if two devices (e.g., smartwatches) pass each other in the physical space, they could exchange part of …

Mykola Pechenizkiy

Tim d'Hondt

-

Bayesian Federated Learning using node-based BNNs.

Node-based BNNs assign latent noise variables to hidden nodes of a neural network. By restricting inference to the node-based latent variables, node stochasticity greatly reduces the dimension of the posterior. This allows for inference of BNNs that are cheap to compute and to communicate, …

Mykola Pechenizkiy

Tim d'Hondt

-

ML projects at ASML

ASML has recently re-confirmed these two projects; a couple more will likely be confirmed in the coming weeks. XAI in Exceptional Model Mining (--- update --- this project is taken by Yasemin Yasarol): In the semiconductor industry there are different, diverse and unique failure modes that impact …

Mykola Pechenizkiy

tbc

-

ML projects at Floryn

--- update --- These projects are no longer available. Theonymfi Anogeianaki will work on FairML. 1. Bayesian inference: We have been doing ‘traditional’ machine learning for years now at Floryn but never investigated Bayesian modeling. We currently make use of probability measures that come from our (frequentist) machine learning …

Mykola Pechenizkiy

-

Computational Complexity of Probabilistic Circuits

This internal project aims at studying and devising new bounds for the computational complexity of inferences in probabilistic circuits and their robust/credal counterpart, including approximation results and fixed-parameter tractability. It requires mathematical interest and good knowledge of theory of computation. This is a theoretical …

Cassio de Campos

-

Learning Bayesian Networks in a Single Step

This internal project aims at implementing a new approach to learning the structure and parameters of Bayesian networks. It is mostly an implementation project, as the novel ideas are already established (but never published, so the approach is novel). It requires high expertise in …

Cassio de Campos

-

Personalized research project in graph data management

This is a wildcard for projects in (knowledge) graph data management. If you took EDS (Engineering Data Systems) and liked what we did there, we offer research+engineering projects in the scope of our database engine AvantGraph (AvantGraph.io). Topics include (but are not limited to): graph query …

Nick Yakovets

Bram van de Wall

-

Continual Structure from Motion

Autonomous vehicles and robots need 3D information such as depth and pose to traverse paths safely and correctly. Classical methods utilize hand-crafted features that can potentially fail in challenging scenarios, such as those with low texture [1]. Although neural networks can be trained on …

Bahram Zonooz

Elahe Arani

-

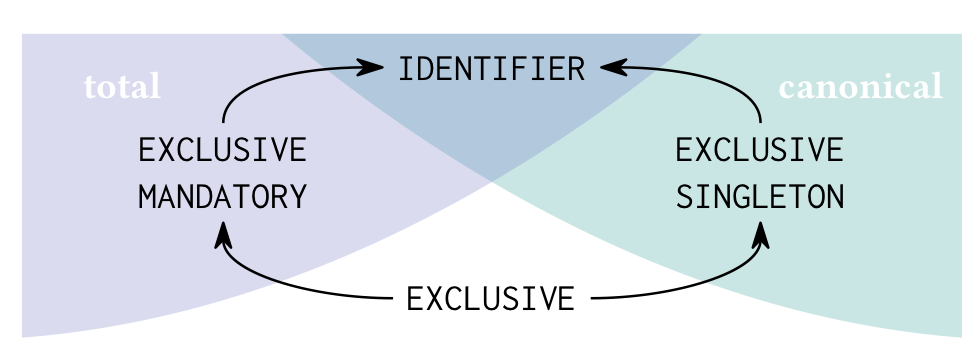

Schema language engineering in AvantGraph

Schema languages are critical for data system usability, both in terms of human understanding and in terms of system performance [0]. The property graph data model is part of the upcoming ISO standards around graph data management [4]. Developing a standard schema language for …

George Fletcher

-

Feature selection, sparse neural networks, truly sparse implementations, and societal challenges

Context of the work: Deep Learning (DL) is a very important machine learning area nowadays and it has proven to be a successful tool for all machine learning paradigms, i.e., supervised learning, unsupervised learning, and reinforcement learning. Still, the scalability of DL models is …

Mykola Pechenizkiy

Ghada Sokar

-

Diversifying attention through randomization in sparse neural networks training

Context of the work: Deep Learning (DL) is a very important machine learning area nowadays and it has proven to be a successful tool for all machine learning paradigms, i.e., supervised learning, unsupervised learning, and reinforcement learning. Still, the scalability of DL models is …

Mykola Pechenizkiy

Ghada Sokar

-

Topics in Continual Lifelong Learning

Nowadays, data changes very rapidly. Every day new trends appear on social media with millions of images. New topics rapidly emerge from the huge number of videos uploaded on YouTube. Attention to continual lifelong learning has recently increased to cope with this rapid data …

Mykola Pechenizkiy

Ghada Sokar

-

Multi-modal Representation Learning and Applications

With the rapid development of multi-media social network platforms, e.g., Instagram, TikTok, etc., more and more content is generated in the multi-modal format rather than pure text. This brings new challenges for researchers to analyze the user generated content and solve some concrete problems …

Yulong Pei

Tianjin Huang

-

Architectural Analysis of Vision Transformers in Continual Learning

Deep neural networks (DNN) deployed in the real world are frequently exposed to non-stationary data distributions and required to sequentially learn multiple tasks. This requires that DNNs acquire new knowledge while retaining previously obtained knowledge. However, continual learning in DNNs, in which networks are …

Elahe Arani

Bahram Zonooz

-

Human Visual System Inspired Mechanisms for Data Curation

Every second, around 10^7 to 10^8 bits of information reach the human visual system (HVS) [IK01]. Because biological hardware has limited computational capacity, complete processing of massive sensory information would be impossible. The HVS has therefore developed two mechanisms, foveation and fixation, that preserve perceptual performance …

Bahram Zonooz

Elahe Arani

-

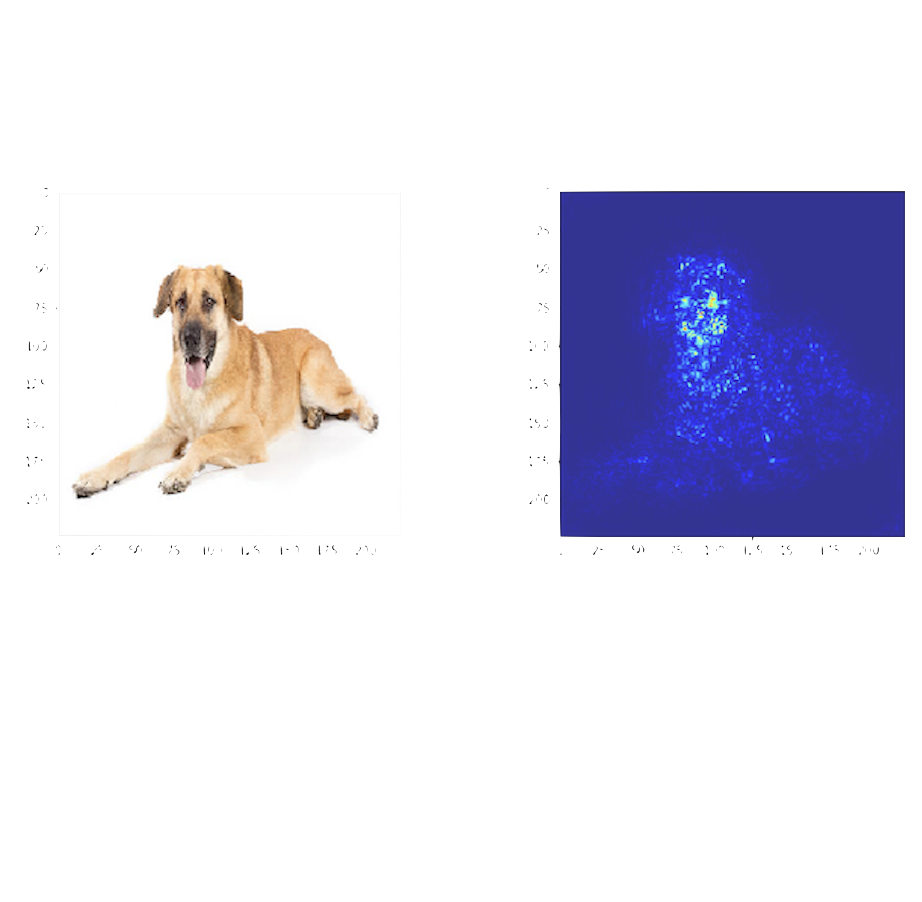

Human Visual System Inspired Mechanisms for Interpretability

Every second, around 10^7 to 10^8 bits of information reach the human visual system (HVS) [IK01]. Because biological hardware has limited computational capacity, complete processing of massive sensory information would be impossible. The HVS has therefore developed two mechanisms, foveation and fixation, that preserve perceptual performance …

Elahe Arani

Bahram Zonooz

-

Human Visual System Inspired Mechanisms for Video Action Recognition/Prediction

Every second, around 10^7 to 10^8 bits of information reach the human visual system (HVS) [IK01]. Because biological hardware has limited computational capacity, complete processing of massive sensory information would be impossible. The HVS has therefore developed two mechanisms, foveation and fixation, that preserve perceptual …

Bahram Zonooz

Elahe Arani

-

Online knowledge distillation for self-supervised learning

Self-supervised learning [1, 2] solves pretext prediction tasks that do not require annotations in order to learn feature representations. Recent empirical research has demonstrated that deeper and wider models benefit more from task-agnostic use of unlabeled data than their smaller counterparts; i.e., smaller models …

Bahram Zonooz

Elahe Arani

-

Cardinality estimation for factorized query processing

It is well-known that processing of complex analytical queries over large graph datasets introduces a major pain point - runtime memory consumption. To address this, recently, a method based on factorized query processing (FQP) has been proposed. It has been shown that this method …

Nick Yakovets

-

Deep Clustering: Simultaneous Optimization of Representations and Clustering

Deep clustering is a well-researched field with promising approaches. Traditional nonconvex clustering methods require the definition of a kernel matrix, whose parameters vastly influence the result, and are hence difficult to specify. In turn, the promise of deep clustering is that a feature transformation …

Sibylle Hess

-

Building benchmarks for modern graph databases

There exists a wide variety of benchmarks available for graph databases: both synthetic and real-world-based. However, one important problem with the current state of the art in graph database benchmarking is that all of the existing benchmarks are inherently based on workloads from relational databases, …

Nick Yakovets

-

Overcoming data scarcity in visual object detection and recognition tasks with frugal learning

Introduction: The Observe, Orient, Decide and Act (OODA) loop [1] shapes most modern military warfare doctrines. Typically, after gathering sensor and intelligence data in the Observe step, a common tactical operating picture of the monitored aerial, maritime and/or ground scenario is built and shared among …

Mykola Pechenizkiy

Stiven Schwanz Dias

-

Achieving main-memory query processing performance on secondary storage on graph query workloads

Since DRAM is still relatively expensive and contemporary graph database workloads operate with billion-node-scale graphs, contemporary graph database engines still have to rely on secondary storage for query processing. In this project, we explore how novel techniques such as variable-page sizes and pointer swizzling can …

Nick Yakovets

Bram van de Wall

-

Fairness-aware Influence Minimization for Combating Fake News

Influence blocking and fake news mitigation have been the main research direction for the network science and data mining research communities in the past few years. Several methods have been proposed in this direction [1]. However, none of the proposed solutions has proposed feature-blind …

Mykola Pechenizkiy

Akrati Saxena

-

Social Media Data Analysis - Twitter Case Study

In this project, we will analyze a social media dataset to answer interesting questions about human behavior. We aim to study biases using social media data and propose fair solutions. The project also aims to model human behavior on social media (depends on the topic). This …

George Fletcher

Akrati Saxena

-

Fairness in Network Anonymization

In the past 10-15 years, a massive amount of social networking data has been released publicly and analyzed to better understand complex networks and their different applications. However, ensuring the privacy of the released data has been a primary concern. Most of the graph …

Mykola Pechenizkiy

Akrati Saxena

-

Fairness-aware Community Detection Method

In real-world networks, nodes are organized into communities and the community size follows a power-law distribution. In simple words, there are a few communities of bigger size and many communities of small size. Several methods have been proposed to identify communities using structural properties of …

George Fletcher

Akrati Saxena

-

Towards Cognitive-inspired Adversarial Training Approach

Deep neural networks (DNN) are achieving superior performance in perception tasks; however, they are still riddled with fundamental shortcomings. There are still core questions about what the network is truly learning. DNNs have been shown to rely on local texture information to make decisions, …

Elahe Arani

Bahram Zonooz

-

Generalizable, fair and explainable default predictors

Context: The financial sector is a tightly regulated environment. All models used in the financial sector are studied under the microscope of developers, validators, regulators, and eventually the end users – the clients, before these models can be deployed and used. To assess whether a customer should be …

Mykola Pechenizkiy

DLL

-

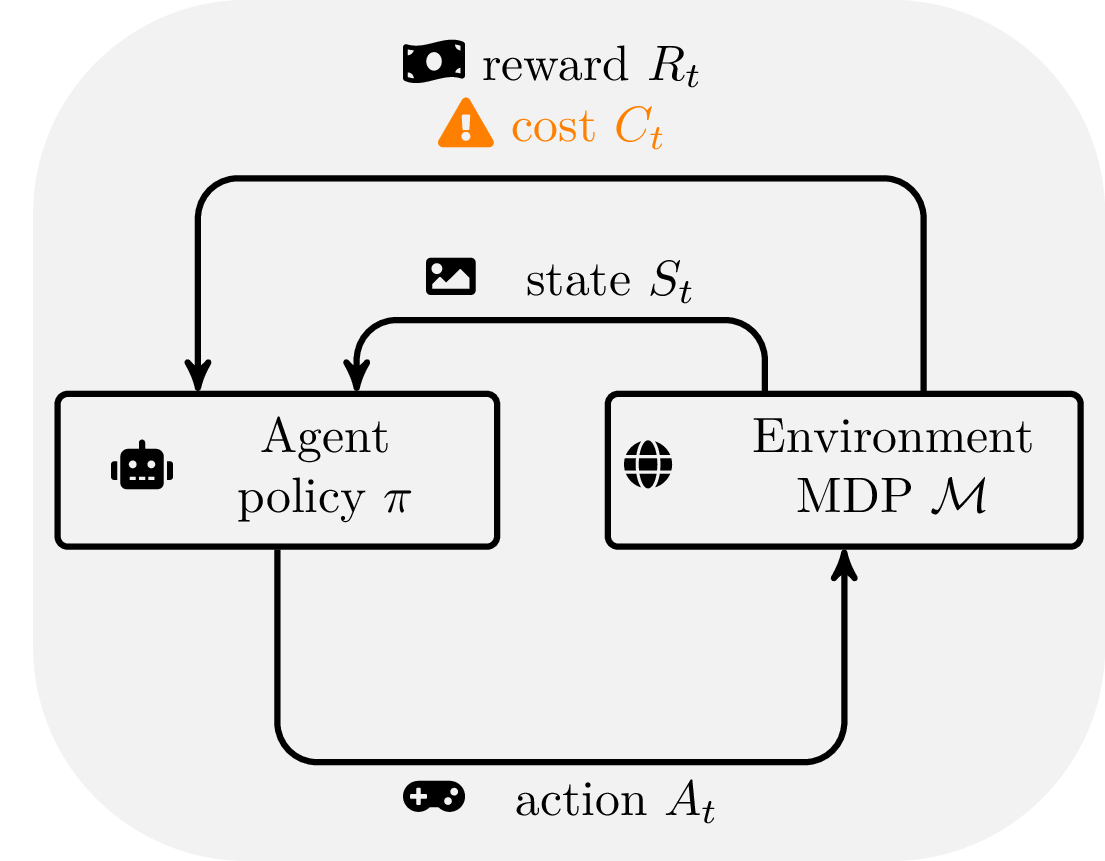

Data-Efficient Reinforcement Learning under Constraints

Reinforcement learning (RL) is a general learning, predicting, and decision-making paradigm and applies broadly in many disciplines, including science, engineering, and humanities. Conventionally, classical RL approaches have seen prominent successes in many closed world problems, such as Atari games, AlphaGo, and robotics. However, dealing …

Mykola Pechenizkiy

Danil Provodin

-

Input Adaptive Inference for Semantic Segmentation

Neural networks typically consist of a sequence of well-defined computational blocks that are executed one after the other to obtain an inference for an input image. After the neural network has been trained, a static inference graph comprising these computational blocks is executed for …

Bahram Zonooz

Elahe Arani

-

Explaining schema conformance for knowledge graphs: conformance reporting for WikiProjects members

Wikidata is an open, collaboratively built knowledge base. In the Wikidata community, groups of editors who share interest in specific topics form WikiProjects. As part of their regular work, members of WikiProjects would like to regularly test the conformance of entity data in Wikidata against schemas for entity classes. …

George Fletcher

Katherine Thornton, Yale University (USA)

-

Improving knowledge graph completeness with schemas: Wikidata and ShEx

In the collaboratively built knowledge base Wikidata some editors would appreciate suggestions of how to improve the completeness of items. Currently some community members use an existing tool, Recoin, described in this paper, to get suggestions of relevant properties to use to contribute additional statements. This process could …

George Fletcher

Katherine Thornton, Yale University (USA)

-

SIMD-based JSON data processing in a dynamic Language VM

The JSON data format is one of the most popular human-readable data formats, and is widely used in Web and Data-intensive applications. Unfortunately, reading (i.e., parsing) and processing JSON data is often a performance bottleneck due to the inherent textual nature of JSON. Recent …

Daniele Bonetta

-

ML-based compiler auto-tuning in GraalVM

Machine-learning based approaches [3] are increasingly used to solve a number of different compiler optimization problems. In this project, we want to explore ML-based techniques in the context of the Graal compiler [1] and its Truffle [2] language implementation framework, to improve the performance …

Daniele Bonetta

-

Dynamic SQL query compilation in GraalVM

Data processing systems such as Apache Spark [1] rely on runtime code generation [2] to speed up query execution. In this context, code generation typically translates a SQL query to some executable Java code, which is capable of delivering high performance compared to query interpretation. …

Daniele Bonetta

-

ML-based Profile-guided optimization in GraalVM

Profile-guided optimization (PGO) [1] is a compiler optimization technique that uses profiling data to improve program runtime performance. It relies on the intuition that runtime profiling data from previous executions can be used to drive optimization decisions. Unfortunately, collecting such profile data is expensive, …

Daniele Bonetta

-

Sea-of-nodes graphs query and visualization

Language Virtual Machines such as V8 or GraalVM [3] use Graphs to represent code. One example Graph representation is the so-called Sea-of-nodes model [1]. Sea-of-nodes graphs of real-world programs have millions of edges, and are typically very hard to query, explore, and analyze. In …

Daniele Bonetta

-

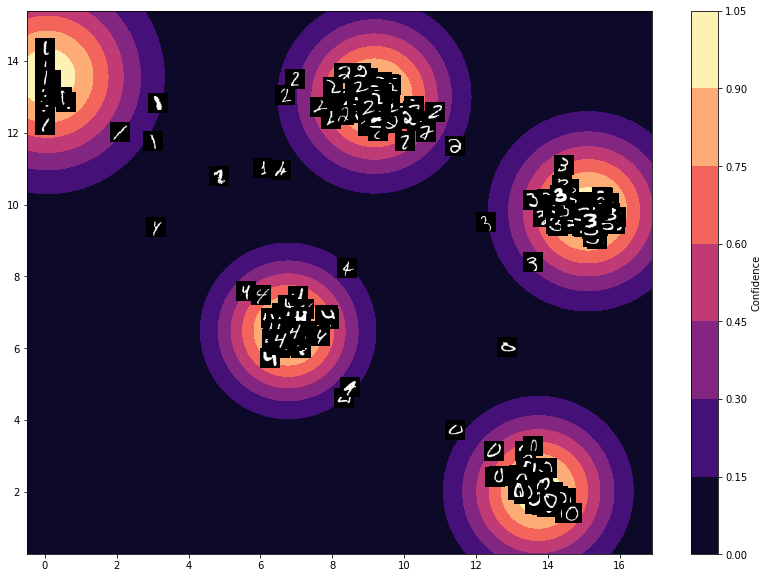

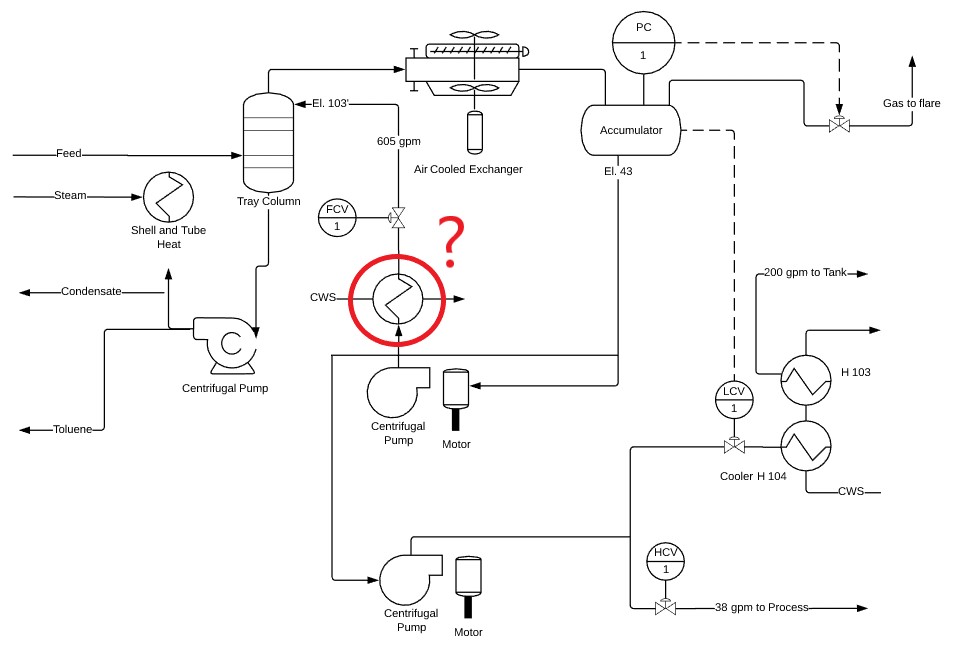

Robust symbol detection and recognition in piping and instrumentation diagrams

Project description: This project is concerned with the recognition of symbols of piping and process equipment together with the instrumentation and control devices that appear on piping and instrumentation diagrams (P&ID). Each item on the P&ID is associated with a pipeline. Piping engineers often receive drawings …

Mykola Pechenizkiy

Stiven Schwanz Dias

-

Battle of the credal networks: strong independence or forward irrelevance?

Bayesian networks are a popular model in AI. Credal networks are a robust version of Bayesian networks created by replacing the conditional probability mass functions describing the nodes by conditional credal sets (sets of probability mass functions). Next to their nodes, Bayesian networks are …

Erik Quaeghebeur

-

Fairness Analysis in Anomaly Detection

In anomaly detection, we aim to identify unusual instances in different applications, including malicious user detection in OSNs, fraud detection, and suspicious bank transaction detection. Most of the proposed anomaly detection methods are dependent on network structure as some specific structural pattern can convey …

Mykola Pechenizkiy

Akrati Saxena

-

Curiosity driven fairness in Reinforcement learning

Reinforcement learning (RL) is a computational approach to automating goal-directed decision making. In this project, we will use the framework of Markov decision processes. Fairness in reinforcement learning [1] deals with removing bias from the decisions made by the algorithms. Bias or discrimination in …

Mykola Pechenizkiy

Pratik Gajane

-

Generate fair (pseudo) samples for reinforcement learning

Reinforcement learning (RL) is a computational approach to automating goal-directed decision making. Reinforcement learning problems use either the framework of multi-armed bandits or Markov decision processes (or their variants). In some cases, RL solutions are sample inefficient and costly. To address this issue, some …

Mykola Pechenizkiy

Pratik Gajane

-

Causal perspective of fairness in reinforcement learning

Reinforcement learning (RL) is a computational approach to automating goal-directed decision making using the feedback observed by the learning agent. In this project, we will be using the framework of multi-armed bandits and Markov decision processes. Observational data collected from real-world systems can mostly …

Mykola Pechenizkiy

Pratik Gajane

Assigned Projects (16)

-

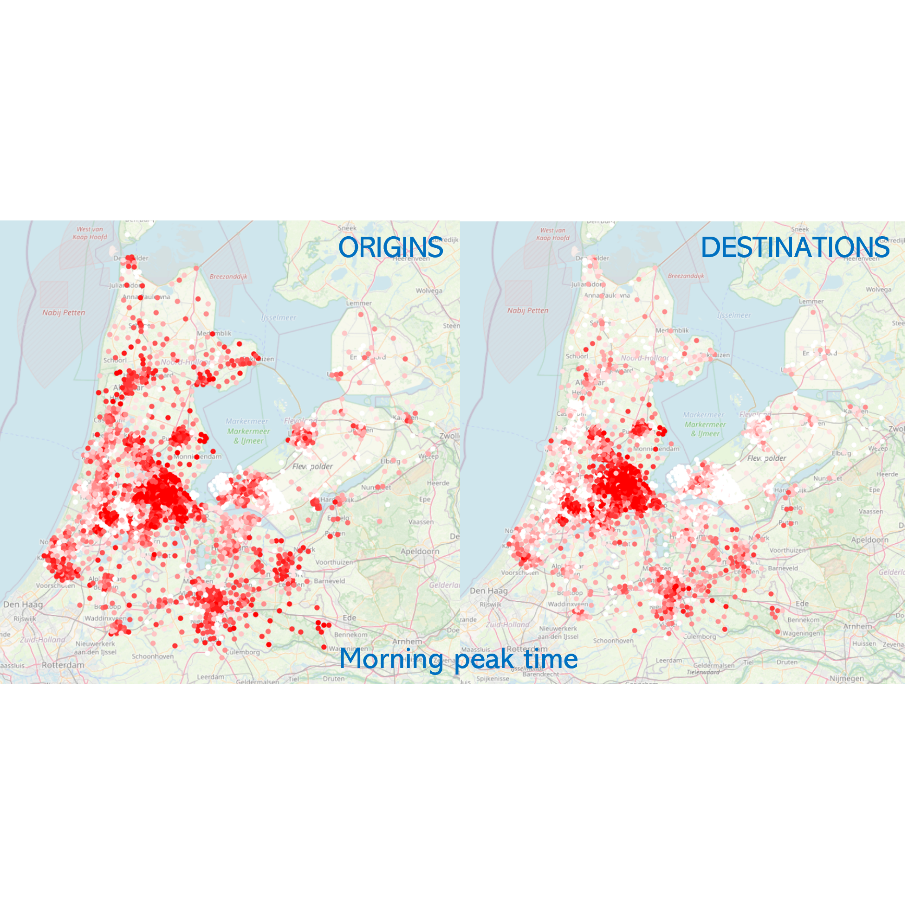

[Internship at TNO] Dynamic road space allocation with shared mobility hubs

Nov 2024

The XCARCITY project investigates how to facilitate and support implementation of car-free areas in Amsterdam, Almere Pampus and Metropoolregio Rotterdam Den Haag. Car-free and car-low areas offer many benefits by freeing up road space, reducing congestion and parking requirements, and generally contributing to increased livability …

Dido Verstegen

Thiago Simão

Canmanie T. Ponnambalam

-

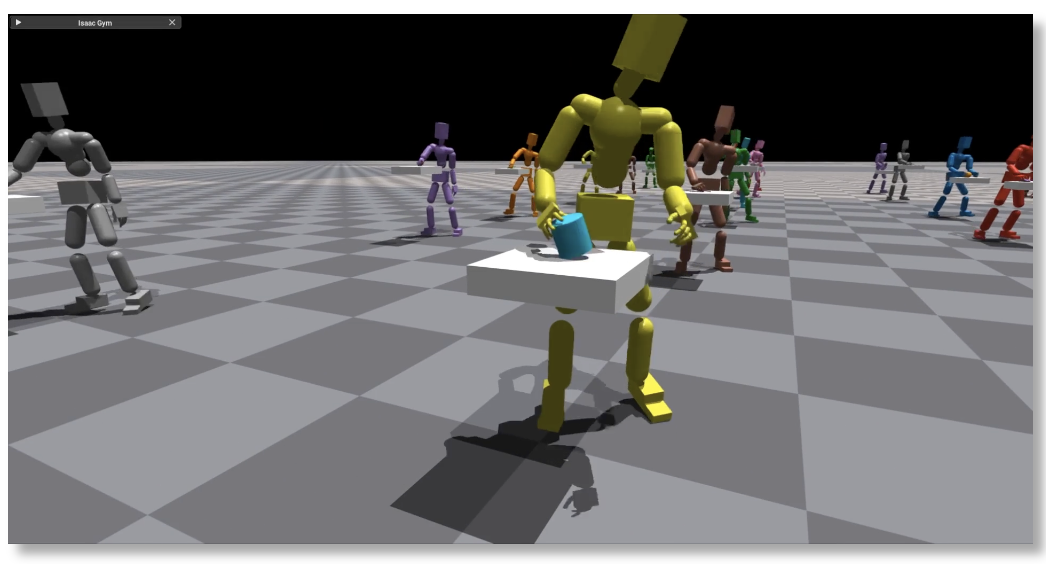

Reinforcement Learning for Contact-rich and Impact-aware Robotic Tasks

Nov 2024

Reinforcement Learning (RL) [6] has achieved successful outcomes in multiple applications, including robotics [1]. A key challenge to deploying RL in such a scenario is to ensure the agent is robust so it does not lose performance even if the environment's geometry and dynamics …

Bram Grooten

Thiago Simão

-

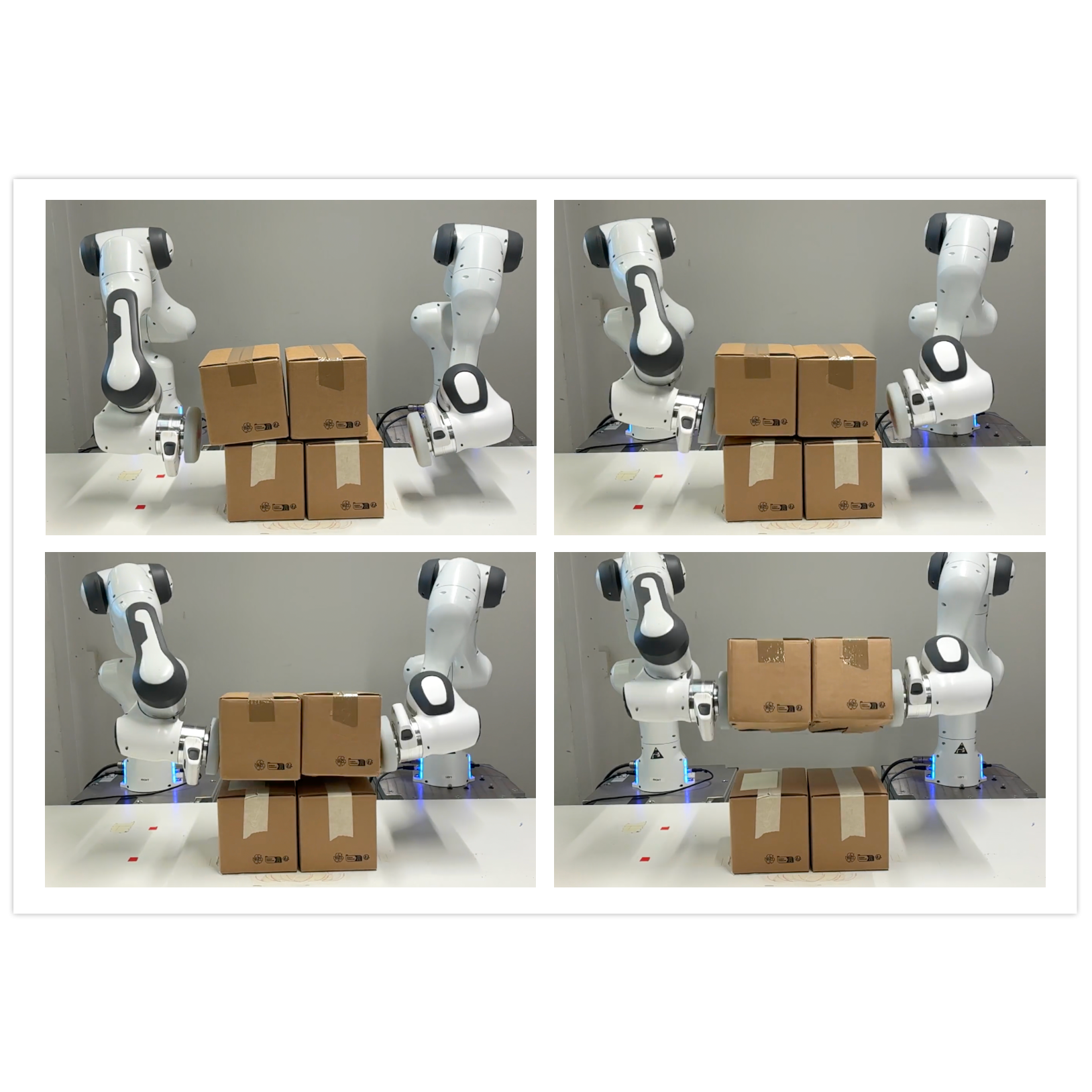

Dual arm manipulation of heavy objects with humanoid robots via reinforcement learning

Nov 2024

Motivation. Reinforcement Learning (RL; Sutton and Barto 2018) has achieved successful outcomes in multiple applications, including robotics (Kober, Bagnell, and Peters 2013). A key challenge to deploying RL in such a scenario is to ensure the agent is robust so it does not lose performance even …

Bram Grooten

Thiago Simão

-

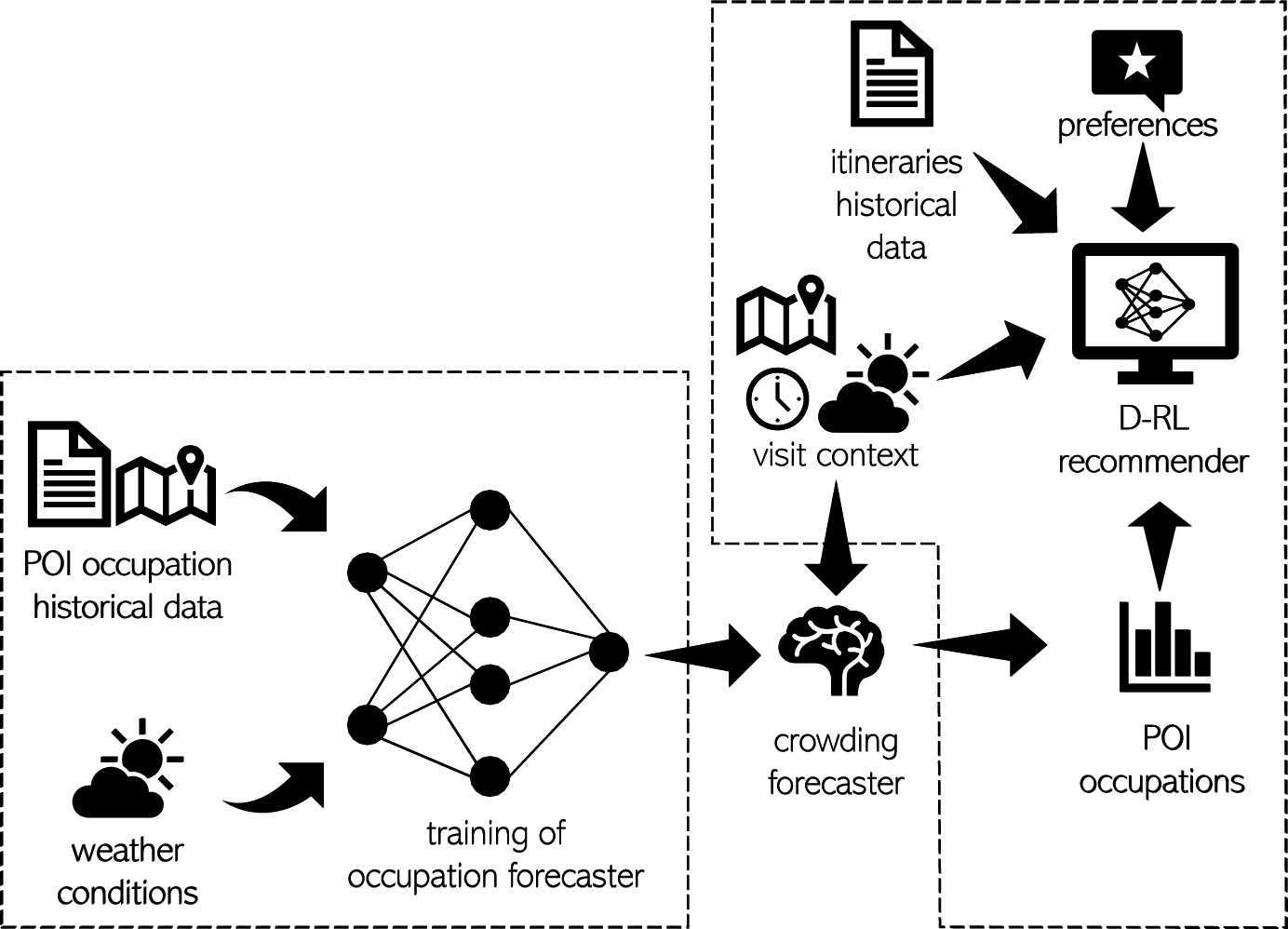

Multi-agent reinforcement learning for sustainable touristic recommender system

Nov 2024

A touristic recommender system (TRS; Dalla Vecchia et al., 2024; Gaonkar et al., 2018; de Nijs et al., 2018) often provides to its users a sequence of recommendations instead of a single suggestion to optimize the user experience in the available time interval. Due …

Paul Dewez

Thiago Simão

Elisa Quintarelli

-

Adversarial attacks for safe transfer in reinforcement learning

Nov 2024

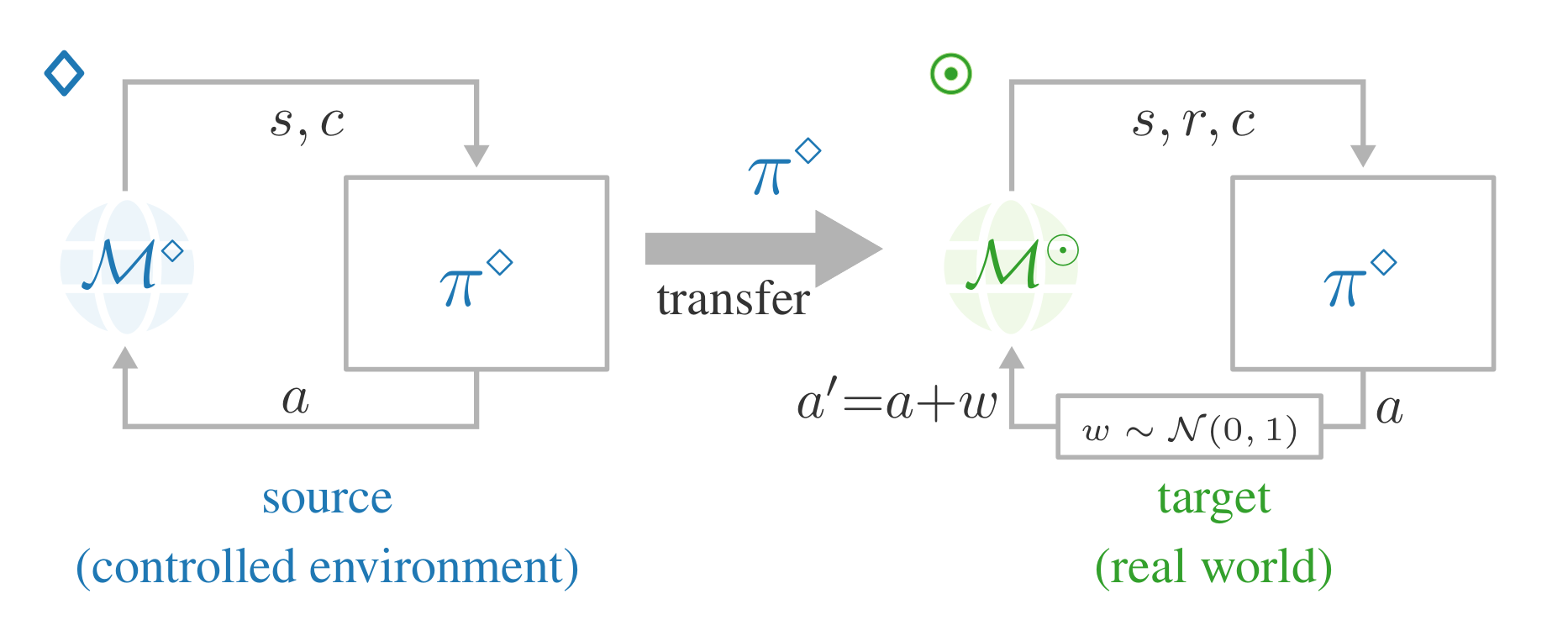

Safety is a paramount challenge for the deployment of autonomous agents. In particular, ensuring safety while an agent is still learning may require considerable prior knowledge (Carr et al., 2023; Simão et al., 2021). A workaround is to pre-train the agent in a similar …

Cheuk Lam Mo

Thiago Simão

-

High-performance Safe RL Benchmarking

Nov 2024

As AI systems become increasingly integral to critical sectors, ensuring their safety and reliability is essential. Reinforcement Learning (RL) is a prominent method that learns optimal behaviors through trial-and-error interactions with a dynamic environment. Yet, the stakes are high: in physical settings, a wrong …

Mourad Boustani

Tristan Tomilin

Thiago Simão

-

Understanding deep learning: efficient retraining of networks

Jul 2024

Recent work has shown that neural networks, such as fully connected networks and CNNs, learn to distinguish between classes from broader to finer distinctions between those classes [1,2] (see Fig. 1). Figure 1: Illustration of the evolution of learning from broader to finer distinctions between …

Hannah Pinson

-

Understanding deep learning: the initial learning rate

Jul 2024

This project is finished/closed. While deep learning has become extremely important in industry and society, neural networks are often considered ‘black boxes’, i.e., it is often believed that it is impossible to understand how neural networks really work. However, there are a lot of …

Hannah Pinson

-

Pimp my BUS: Improving a Minicluster-based Deterministic Pattern Sampling Algorithm for Exceptional Model Mining

Nov 2023

See PDF. As attachment, see also https://wwwis.win.tue.nl/~wouter/MSc/Bart.pdf

Lars Kuijten

Wouter Duivesteijn

-

Preventing Beam Pollution: Defining an Empirical Protocol to Improve Beam Search Lattice Traversal

Oct 2023

See PDF

Bart Slenders

Wouter Duivesteijn

-

Diversity of recommendations in collaboration with Bol.com

Feb 2023

Recommender Systems (RSs) have emerged as a way to help users find relevant information as online item catalogs increased in size. There is an increasing interest in systems that produce recommendations that are not only relevant, but also diverse [1]. In addition to users, increased …

Mykola Pechenizkiy

Hilde Weerts

-

Simulation of Nanopore sequencing (with generative models)

Jan 2023

---UPDATE---: This project is now taken by Jonas Niederle. Nanopore sequencing is a third-generation sequencing method that directly measures long DNA or RNA (Figure 1). The method works by translocating a single DNA strand through a Nanopore in which an electric current signal is measured. The …

Vlado Menkovski

-

Bubble simulation with latent variable models

Nov 2022

--update--: This project is now taken by Tijs Teulings. The topic of the project is simulation of bubbles with deep generative models. Bubbles are a fascinating phenomenon in multiphase flow, and they play an important role in chemical, industrial processes. Bubbles can be simulated well with …

Vlado Menkovski

-

Aspect-based Few-shot classification (Meta-learning)

Nov 2022

--- UPDATE ---: This project is now taken by Tim van Engeland. Meta-learning (also referred to as learning to learn) is a set of Machine Learning techniques that aim to learn quickly from a few given examples in changing environments [1]. One instantiation of the meta-learning …

Vlado Menkovski

-

Exceptional Gestalt Mining (EGM)

Nov 2022

See PDF

Pim Rietjens

Wouter Duivesteijn

Thomas C. van Dijk, Ruhr-Universität Bochum

-

Generating Missing At Random (MAR) data in Images; a Convolutional Approach

Nov 2022

See PDF

Sam Al Habash

Wouter Duivesteijn

Vlado Menkovski

Finished Projects (28)

-

Language Agents for Playing Card Games

Dec 2024

The project is a pioneering initiative that combines Natural Language Processing (NLP) and Reinforcement Learning (RL) methodologies to create intelligent agents capable of understanding natural language instructions and participating in playing card games. This project aims to develop AI-driven agents that not only comprehend …

Meng Fang

Yudi Zhang

-

Playing Text-based Games with Large Language Models

Dec 2024

The project aims to explore the utilization of sophisticated language models in the domain of text-based games. This endeavor seeks to harness the capabilities of large language models, such as GPT (Generative Pre-trained Transformer), in the context of interactive narratives, text adventures, and other …

Meng Fang

Yudi Zhang

-

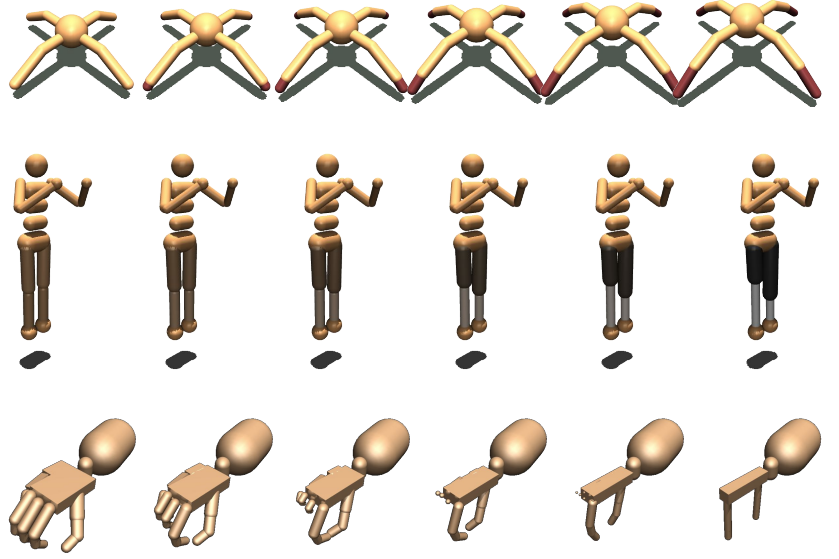

Transfer Learning for Robot-to-Robot Adaptation

Dec 2024

A popular paradigm in robotic learning is to train a policy from scratch for every new robot. This is not only inefficient but also often impractical for complex robots. The project revolves around the exploration and advancement of techniques for transferring policies between different …

Meng Fang

Tristan Tomilin

-

Sustainable Large Language Models

Dec 2024

Project description: Large Language Models (LLMs) are deep-learning models that achieve state-of-the-art performance in many NLP tasks. They typically consist of billions of weights. As a result, expressing weights in float32 leads to models of size at least 1GB. Such large models cannot be easily …

Dalton Harmsen

Jakub Tomczak

-

Large Language Models with Knowledge Graphs

Dec 2024

Project description: Large Language Models (LLMs) are well-known for knowledge acquisition from large-scale corpora and for achieving SOTA performance on many NLP tasks. However, they can suffer from various issues, such as hallucinations, false references, and made-up facts. On the other hand, Knowledge Graphs (KGs) can …

Jasper Linders

Jakub Tomczak

-

Multi-Agent Reinforcement Learning for Cooperative Tasks

Dec 2024

Multi-Agent Reinforcement Learning (MARL) is a field in artificial intelligence where multiple agents learn to make decisions in an environment through reinforcement learning. In the context of cooperative tasks, it involves agents working together to achieve common goals, sharing information and coordinating their actions …

Luka van den Boogaard

Meng Fang

Tristan Tomilin

-

Large language Model Based Chatbots and their Applications

Dec 2024

In recent years, large language models have revolutionized how machines understand and generate human-like text, offering profound implications for chatbot technology. This thesis proposes a deep exploration into the capabilities of these models within chatbot applications, aiming to enhance how they mimic human conversational …

Meng Fang

Jiaxu Zhao

-

Model Transfer for Offline Reinforcement Learning

Nov 2024

Offline Reinforcement Learning (RL) deals with problems where simulation or online interaction is impractical, costly, and/or dangerous, making it possible to automate a wide range of applications from healthcare and education to finance and robotics. However, learning new policies from offline data suffers from distributional shifts …

More info Maryam Tavakol

Maryam Tavakol

-

Curriculum Learning for Constrained Reinforcement Learning in Contextual MDPs Oct 2024

Sample complexity is one of the core challenges in reinforcement learning (RL) [1]. An RL agent often needs orders of magnitude more data than supervised learning methods to achieve reasonable performance. This clashes with problems with safety requirements, where the agent should minimize the …

Kelvin Toonen More info Thiago Simão

Thiago Simão

-

CHIL-M: CHallenges in analyzing Incomplete Longitudinal Medical data Aug 2024

--- Subproject 1 has been filled. Subproject 2 is still open. In this project, we work together with the Dutch south-west Early Psoriatic Arthritis Registry (DEPAR), which is a collaboration of 15 medical centers in the Netherlands that aim to investigate which patient characteristics, measurements …

Victoria Tascau Rianne Schouten

More info

Rianne Schouten

More info Wouter Duivesteijn

Wouter Duivesteijn

-

Sustainable Diffusion Models Aug 2024

Project description: Diffusion Models are deep-learning models that achieve state-of-the-art performance in many image synthesis tasks. They are typically parameterized with UNets and consist of billions of weights. Expressing their weights in float32 leads to models that cannot be easily deployed on edge devices (e.g., …

More info Jakub Tomczak

Jakub Tomczak

-

Deep Generative Models for (Conditional) Molecule Generation Aug 2024

Project description: Generative AI has become one of the leading approaches to (conditional) molecule generation. Just as Large Language Models can learn (to some degree) rules governing natural language, could Large Chemistry Models learn rules governing atoms (quantum chemistry)? This is the leading research question of …

Sidney Damen More info Jakub Tomczak

Jakub Tomczak

-

Generative Object Detection Models for Mobile Robotics Applications Aug 2024

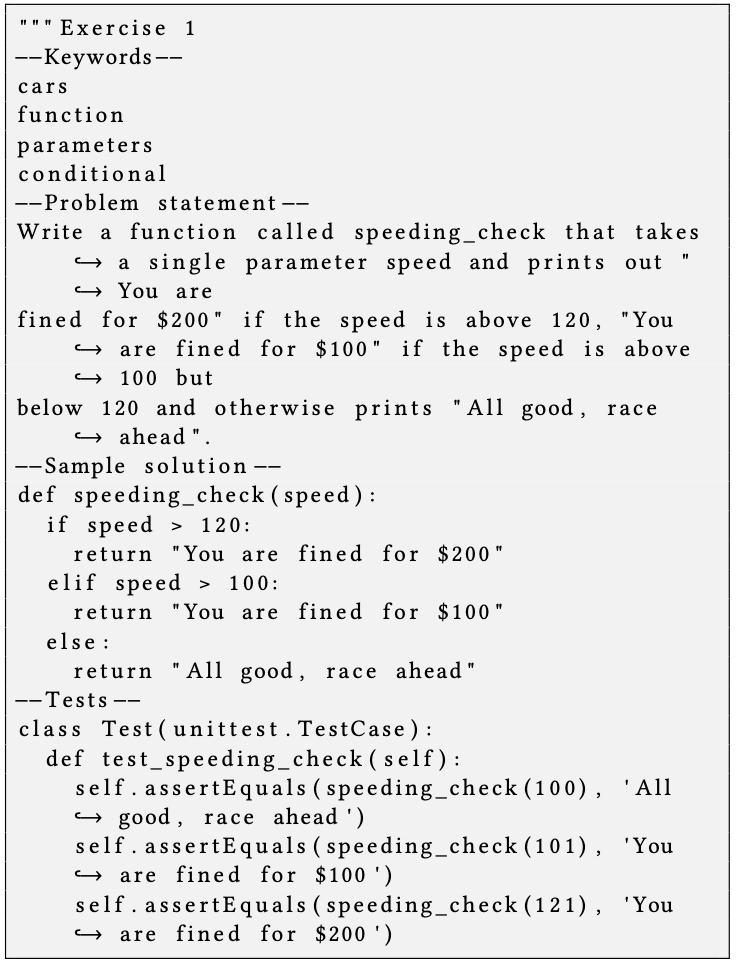

Project description: In the dynamic landscape of mobile robotics, object detection remains a foundational challenge, critical for enabling machines to interact intelligently with their surroundings. At Avular, a pioneering mobile robotics company in Eindhoven, we are excited to explore novel and innovative approaches in this …

Dik van Genuchten Jakub Tomczak

More info Bart Keulen

Jakub Tomczak

More info Bart Keulen

-

(closed) Understanding deep learning: linear vs non-linear models and their dynamics of learning Jul 2024

This project is finished/closed. While deep learning has become extremely important in industry and society, neural networks are often considered ‘black boxes’, i.e., it is often believed that it is impossible to understand how neural networks really work. However, there are a lot of aspects …

More info Hannah Pinson

Hannah Pinson

-

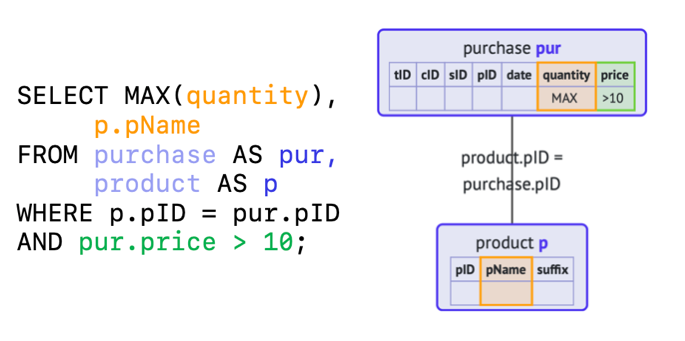

A feasibility study on automated database exercise generation with large language models Jul 2024

Your lecturers here at the university spend a lot of time creating new exercises for our students, both for weekly assignments and for exams. If you extrapolate this to universities and professional training globally, this is a tremendous effort and use of time. It …

Willem Aerts George Fletcher

More info

George Fletcher

More info Daphne Miedema

Daphne Miedema

-

Analyzing progression of question difficulty for SQL questions on Stack Overflow Jul 2024

SQL is difficult to use effectively, and its users make many errors. Error types and frequency in SQL have been analyzed by various researchers, such as Ahadi, Prior, Behbood and Lister, and Taipalus and Siponen. One method of problem solving that computer scientists apply is posting …

Bert Wijnhoven George Fletcher

More info

George Fletcher

More info Daphne Miedema

Daphne Miedema

-

On Neural Cellular Automata Jul 2024

The design of collective intelligence, i.e. the ability of a group of simple agents to collectively cooperate towards a unifying goal, is a growing area of machine learning research aimed at solving complex tasks through emergent computation [1, 2]. The interest in these techniques …

Erik Quaeghebeur

More info

Erik Quaeghebeur

More info Gennaro Gala

Gennaro Gala

-

Prompt Engineering for Large Language Models (taken) Dec 2023

General: An internship at Accenture about prompt engineering for LLMs. Requirements: From our students we expect the following: high independence (including proposing own ideas); good understanding of mathematics (algebra, calculus, statistics, probability theory); good programming skills (Python + ML/DL libraries, preferably PyTorch). Thesis template: Please take a look at this …

Joëlle Blink More info Jakub Tomczak

Jakub Tomczak

-

k-Nearest Neighbor Imputation Under Monotonicity Constraints Nov 2023

See PDF

Iko Vloothuis Wouter Duivesteijn

More info

Wouter Duivesteijn

More info Rianne Schouten

Rianne Schouten

-

Execution and Visual Representations of SQL queries in case of syntax errors Sep 2023

Query formulation in SQL is difficult for novices, and many errors are made in query formulation. Existing research has focused on registering error types and frequencies. Not much attention has been paid to solving these problems. One of the problems in SQL is with …

Bas Witters George Fletcher

More info

George Fletcher

More info Daphne Miedema

Daphne Miedema

-

Efficient surrogates for the Ainslie wind turbine wake model Aug 2023

In wind farms, one source of reduction in power generation by the turbines is the reduction of wind speed in the wake downstream of each turbine's rotor. Namely, a turbine downstream in the wind direction of another will effectively experience wind with a reduced …

Erik Quaeghebeur

More info Laurens Bliek (Information Systems, IE&IS)

Erik Quaeghebeur

More info Laurens Bliek (Information Systems, IE&IS)

-

Object measurement by using computer vision (at a company) Aug 2023

Company: Datacation / aerovision.ai
Location: Eindhoven (AI Innovation Center at High Tech Campus) or Amsterdam (VU)
Project description: Aerovision.ai is a start-up that is building a no-code A.I. platform for drone companies. With this A.I. platform, companies can train, deploy and evaluate their customized computer vision algorithms, …

Jakub Tomczak

More info At the company

Jakub Tomczak

More info At the company

-

Multivariate correlations for data cleaning Jul 2023

Correlations are extensively used in all data-intensive disciplines, to identify relations between the data (e.g., relations between stocks, or between medical conditions and genetic factors). The 'industry-standard' correlations are pairwise correlations, i.e., correlations between two variables. Multivariate correlations are correlations between three or more variables. …

More info Odysseas Papapetrou

Odysseas Papapetrou

-

Correlation Detective on streaming data Jul 2023

Correlations are extensively used in all data-intensive disciplines, to identify relations between the data (e.g., relations between stocks, or between medical conditions and genetic factors). The 'industry-standard' correlations are pairwise correlations, i.e., correlations between two variables. Multivariate correlations are correlations between three or more variables. …

More info Odysseas Papapetrou

Odysseas Papapetrou

-

Efficient Granger causality Jul 2023

Granger causality is among the standard functions for quantifying causal relationships between time series (e.g., closing prices of stocks). However, naïve computation of Granger causality requires pairwise comparisons between all time series, which comes with quadratic complexity. In this project you will focus on …

More info Odysseas Papapetrou

Odysseas Papapetrou

-

(self-defined project on model-centric SD) Jul 2023

(irrelevant for self-defined project)

Niels Schelleman More info Wouter Duivesteijn

Wouter Duivesteijn

-

(self-proposed project on time-dependent SD) Jul 2023

(irrelevant)

Bart Engelen More info Wouter Duivesteijn

Wouter Duivesteijn

-

Identifying robust instances in classification Jul 2023

In a classification task, some instances are classified more robustly than others. Namely, even with a large modification of the training set, these instances (in the test set) will be assigned to the same class. Other instances are non-robust in the sense that a …

More info Erik Quaeghebeur

Erik Quaeghebeur