Project: Dual-arm manipulation of heavy objects with humanoid robots via reinforcement learning

Description

Motivation.

Reinforcement Learning (RL; Sutton and Barto 2018) has achieved successful outcomes in multiple applications, including robotics (Kober, Bagnell, and Peters 2013). A key challenge in deploying RL in such settings is ensuring that the agent is robust, so that its performance does not degrade when the environment's geometry and dynamics undergo small changes.

Challenge.

In manipulation tasks with humanoid robots, it is not yet clear how to develop effective RL approaches for systems with a large number of degrees of freedom (DoFs), such as full humanoids (35–50 DoFs) or even upper-body humanoids (20 DoFs with simple grippers). In this project, we are particularly interested in dual-arm manipulation tasks, such as lifting or pivoting heavy objects. These tasks have potential applications in warehouse and airport automation, a topic TU/e is actively investigating.

Existing Methods.

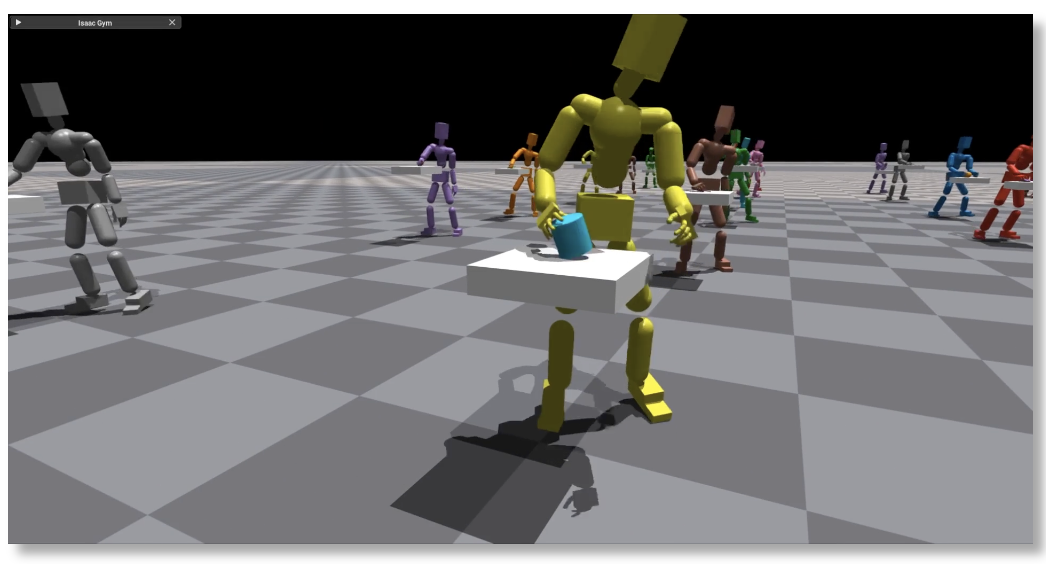

A very recent work (Luo et al. 2024) has shown that combining a human motion database with a latent space of human movement learned from data can be used in place of joint angles to explore the space of grasping motions, yielding effective and natural-looking motions. So far, this has been demonstrated in simulation for humanoid robots equipped with multi-fingered hands. The figure, from (Luo et al. 2024), shows impact-aware control being used to grab heavy boxes. See the paper's project page and videos for more details.

Goal.

In this project, we investigate how to train RL agents for such high-degree-of-freedom, contact-rich tasks. (Luo et al. 2024) and the references therein provide a starting point for finding relevant literature and for understanding the current challenges and efforts of the machine learning and robotics communities in this direction.

Main Tasks.

- Perform a literature review on the topic (in both the reinforcement learning and the robot control literature)

- Select example scenarios

- Set up the software infrastructure for training (expected: NVIDIA Isaac Gym)

- Tailor or develop new reinforcement learning approaches for dual-arm humanoid manipulation

- Perform numerical simulations (combining our own implementation and open-source software, depending on the complexity of the selected scenarios) to demonstrate the newly developed approach(es)

Prerequisites.

- Appropriate background in probability theory, mechanics, and machine learning, and a strong interest in developing it further in the context of robotics

- Suitable programming experience (Python, MATLAB, C/C++, …), potentially also with ML frameworks (e.g., PyTorch, TensorFlow, …)

Supervision Team

| Role | Supervisor |
|---|---|
| Daily supervisor | Bram Grooten |
| External supervisor | Alessandro Saccon, Mechanical Engineering Department |

References

- Kober, Jens, J. Andrew Bagnell, and Jan Peters. 2013. “Reinforcement Learning in Robotics: A Survey.” Int. J. Robotics Res. 32 (11): 1238–74.

- Luo, Zhengyi, Jinkun Cao, Sammy Christen, Alexander Winkler, Kris Kitani, and Weipeng Xu. 2024. "Grasping Diverse Objects with Simulated Humanoids." arXiv:2407.11385.

- Sutton, Richard S., and Andrew G. Barto. 2018. Reinforcement Learning: An Introduction. 2nd ed. MIT Press.

Details

- Supervisor: Bram Grooten

- Secondary supervisor: Thiago Simão

- External location: Mechanical Engineering Department