Project: Multi-Agent Reinforcement Learning for Cooperative Tasks

Description

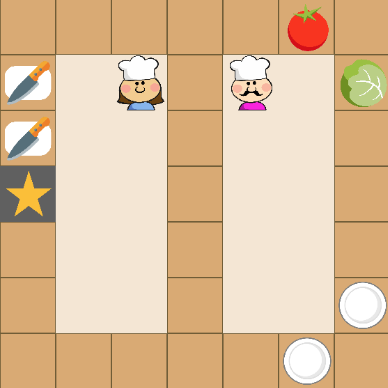

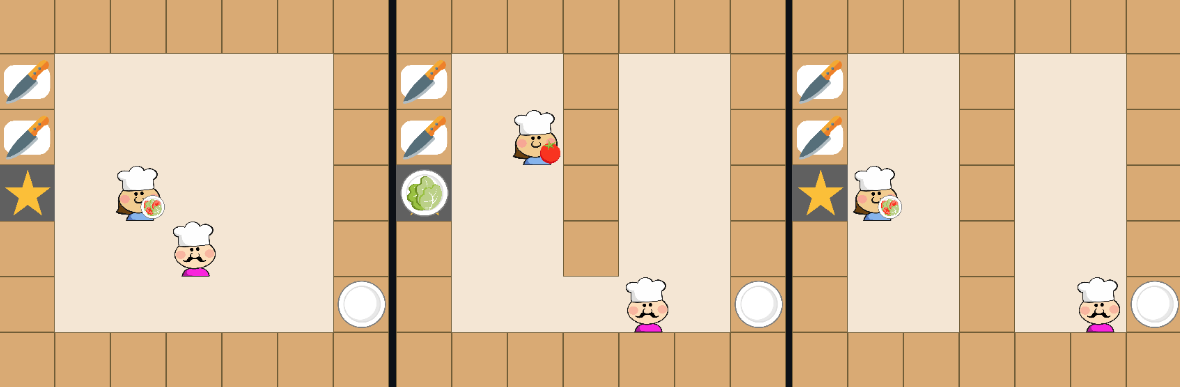

Multi-Agent Reinforcement Learning (MARL) is a field of artificial intelligence in which multiple agents learn, through reinforcement learning, to make decisions in a shared environment. In cooperative tasks, the agents work towards a common goal, sharing information and coordinating their actions to maximize the team's overall performance.

The motivation for improving MARL agents lies in the complexity of real-world scenarios in which multiple agents must collaborate. More capable agents would enable more efficient and adaptable systems for applications such as autonomous vehicles, smart grids, and robotic teams. Ultimately, the goal is for AI agents to navigate dynamic environments, learn from each other, and collectively accomplish tasks that would be difficult for any single agent to handle alone.

This project aims to analyse existing cooperative MARL methods and to develop novel, effective, and efficient ones, focusing on problem scenarios in simulation environments such as the physics-based MuJoCo robotics suite or a simplified version of the Overcooked game.
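
As a minimal illustration of why coordination matters in cooperative tasks, the sketch below uses a two-agent matrix game in which both agents receive the same team reward. The payoff values follow the classic "climbing game" and are an illustrative assumption, not part of this project. If each agent independently picks the action with the best average reward against a uniformly random partner, the pair settles on a worse joint action than the one found by choosing jointly, a failure mode often called relative overgeneralization. The function names `joint_optimum` and `independent_choice` are hypothetical.

```python
from itertools import product

# Illustrative shared team reward (the "climbing game"); both agents get
# the same payoff. Rows: agent 1's action, columns: agent 2's action.
PAYOFF = [
    [11, -30, 0],
    [-30, 7, 6],
    [0, 0, 5],
]


def joint_optimum(payoff):
    """Best joint action when both actions are chosen together."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    return max(product(range(n_rows), range(n_cols)),
               key=lambda ij: payoff[ij[0]][ij[1]])


def independent_choice(payoff):
    """Each agent picks the action with the best average team reward,
    assuming its partner acts uniformly at random (no coordination)."""
    n_rows, n_cols = len(payoff), len(payoff[0])
    row_avg = [sum(payoff[i]) / n_cols for i in range(n_rows)]
    col_avg = [sum(payoff[i][j] for i in range(n_rows)) / n_rows
               for j in range(n_cols)]
    a1 = max(range(n_rows), key=lambda i: row_avg[i])
    a2 = max(range(n_cols), key=lambda j: col_avg[j])
    return a1, a2


if __name__ == "__main__":
    best = joint_optimum(PAYOFF)
    naive = independent_choice(PAYOFF)
    # prints "coordinated: (0, 0) reward 11"
    print("coordinated:", best, "reward", PAYOFF[best[0]][best[1]])
    # prints "independent: (2, 2) reward 5"
    print("independent:", naive, "reward", PAYOFF[naive[0]][naive[1]])
```

The independent agents land on a joint reward of 5 instead of 11 because the high-reward action is heavily penalised whenever the partner fails to match it. Closing this kind of gap is one motivation for the coordination and communication techniques listed under Objectives.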

Objectives

Algorithmic Advancements: Investigate and develop novel MARL algorithms tailored specifically to cooperative tasks. Explore techniques that improve coordination between agents, leading to more effective task completion and better cooperation dynamics.

Analysis of Existing Methods: Conduct a comprehensive analysis of existing MARL algorithms in cooperative settings. Identify the strengths and weaknesses of current approaches, providing insights into their limitations and areas for improvement.

Benchmark and Environment Development: Create new cooperative environments or benchmarks that can serve as testbeds for evaluating MARL algorithms in cooperative scenarios. These environments should offer a range of challenges and complexities to assess an algorithm's ability to handle various cooperative tasks.

Efficient Communication: Investigate communication and information-sharing strategies that enhance agents' ability to collaborate. Explore methods for the efficient exchange of information in cooperative multi-agent systems.

Outcomes

This project aims to contribute to the advancement of MARL in cooperative settings, enhancing agents' ability to collaborate effectively on complex tasks. The research findings may have implications for various domains, including robotics, automation, and multi-agent systems.

Reading

Lee, Y., Yang, J., Lim, J. J. Learning to Coordinate Manipulation Skills via Skill Behavior Diversification. ICLR, 2020.

Wu, S. A., Wang, R. E., Evans, J. A., Tenenbaum, J. B., Parkes, D. C., Kleiman-Weiner, M. Too Many Cooks: Bayesian Inference for Coordinating Multi-Agent Collaboration. Topics in Cognitive Science, 2021.

Details

- Student: Luka van den Boogaard

- Supervisor: Meng Fang

- Secondary supervisor: Tristan Tomilin