Project: Evaluating Explanations of Model Predictions

Description

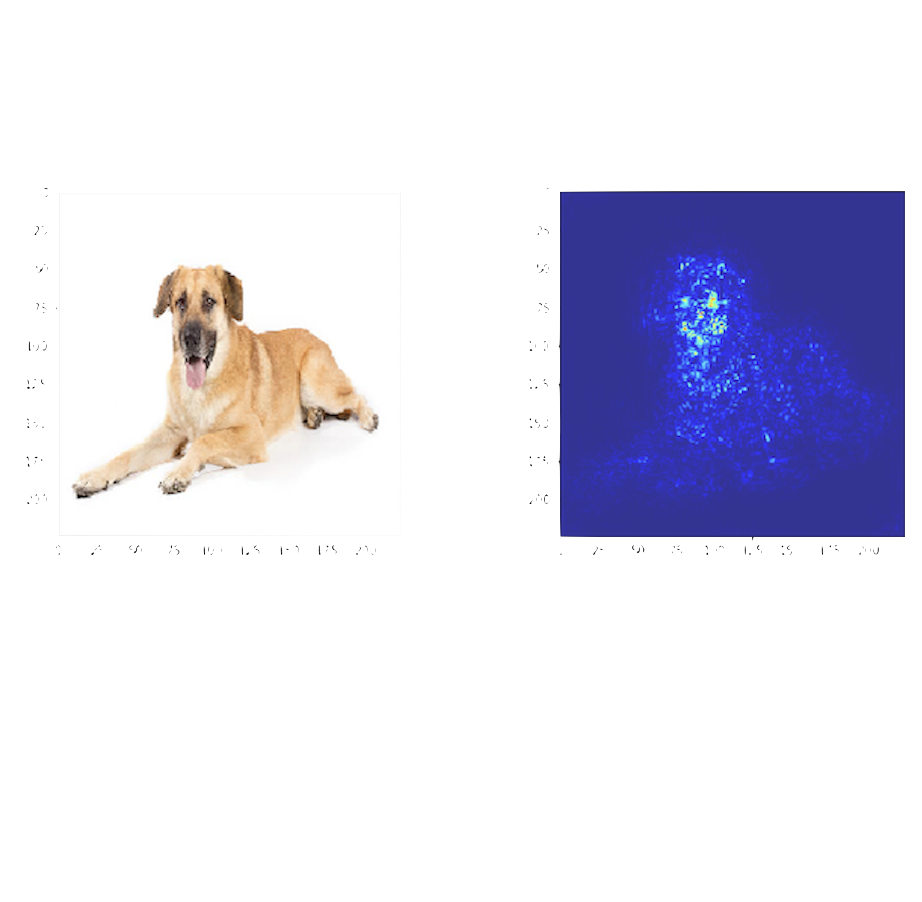

The black-box nature of neural networks hinders their application in high-impact areas such as health care, or more generally wherever predictions have real-world consequences. The field of Explainable AI (XAI) emerged in response. State-of-the-art XAI methods put forward a hypothesis about which features of the input space, e.g., pixels in an image, are relevant for the class prediction.

However, a major problem with these methods is that we do not know how to test these hypotheses. A straightforward approach would be to remove the "background" of the image, where the background consists of the pixels deemed irrelevant. For example, we could set the background to black and check whether the classifier still predicts the same class based only on the hypothetically relevant pixels. Yet setting the background to black changes the image itself. Hence we cannot answer the question of whether a given image is classified in a certain way (mainly) because of the values of the identified pixels. We can only answer a different question: whether other images that share those pixel values are classified in the same way. For example, images with a black background are often classified as "monitor", which is not completely unreasonable. Thus, the question arises of what a sensible evaluation looks like.
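
The masking test described above can be sketched in a few lines. This is only an illustration under stated assumptions: `mask_background`, `prediction_is_stable`, and `toy_classifier` are hypothetical names, images are small grayscale grids, and the toy classifier is a hand-written stand-in for a trained network that was deliberately built to label dark images as "monitor".

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image, pixel values in [0, 1]
Mask = List[List[bool]]    # True marks a pixel the explanation deems relevant


def mask_background(image: Image, relevant: Mask, fill: float = 0.0) -> Image:
    """Keep the relevant pixels and overwrite all others with `fill` (black by default)."""
    return [
        [pixel if keep else fill for pixel, keep in zip(img_row, mask_row)]
        for img_row, mask_row in zip(image, relevant)
    ]


def prediction_is_stable(
    classify: Callable[[Image], str], image: Image, relevant: Mask
) -> bool:
    """Check whether the predicted class survives blacking out the background."""
    return classify(image) == classify(mask_background(image, relevant))


def toy_classifier(image: Image) -> str:
    """Hypothetical stand-in for a network: predicts 'monitor' for dark images."""
    pixels = [p for row in image for p in row]
    mean_brightness = sum(pixels) / len(pixels)
    return "monitor" if mean_brightness < 0.3 else "dog"


# A bright 3x3 image in which only the centre pixel is marked as relevant.
image = [
    [0.9, 0.8, 0.7],
    [0.6, 0.9, 0.5],
    [0.4, 0.7, 0.8],
]
relevant = [
    [False, False, False],
    [False, True, False],
    [False, False, False],
]

masked = mask_background(image, relevant)
print(toy_classifier(image))                                   # dog
print(toy_classifier(masked))                                  # monitor
print(prediction_is_stable(toy_classifier, image, relevant))   # False
```

In this toy setting the prediction flips after masking, but that flip says little about the centre pixel: the masked input is simply a different, much darker image. This is the ambiguity that makes the evaluation question nontrivial.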

This master thesis is for students who are inherently curious and creative thinkers. A strong mathematical background is also desirable for understanding the principles of explanation methods and how they connect to the workings of neural networks.

Details

- Supervisor: Sibylle Hess

- Secondary supervisor: Wil Michiels
