Project: Reinforcement Learning for Contact-rich and Impact-aware Robotic Tasks

Description

Reinforcement Learning (RL) [6] has achieved successful outcomes in multiple applications, including robotics [1]. A key challenge in deploying RL in robotics is ensuring that the agent is robust, i.e., that it does not lose performance when the environment's geometry and dynamics undergo small changes.

Challenge

In manipulation tasks, the RL agent must deal with multiple contacts and, in dynamic manipulation, also with intentional impacts. In this setting, the combined agent-environment dynamics are highly nonlinear and, in fact, nonsmooth due to the presence of dry friction and switching contacts. It is still unclear how best to design RL agents that cope with these nonsmooth conditions and how to ensure they are robust to small environment changes and perception errors.
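To make the notion of nonsmooth dynamics concrete, the following is a minimal sketch of a toy system that is not part of the project itself: a point mass falling onto rigid ground, with an assumed Newton restitution law. The function names, the restitution coefficient, and the integration scheme are illustrative assumptions. The velocity evolves smoothly until the impact, where it jumps instantaneously.

```python
# Toy model (assumption for illustration): 1D point mass falling onto rigid ground.
G = 9.81           # gravitational acceleration [m/s^2]
RESTITUTION = 0.5  # assumed Newton restitution coefficient


def step(height, velocity, dt=1e-3):
    """Advance the state by one semi-implicit Euler step, with an impact reset."""
    velocity -= G * dt
    height += velocity * dt
    impacted = height <= 0.0 and velocity < 0.0
    if impacted:
        # Impact map: the velocity jumps instantaneously (a state jump).
        height = 0.0
        velocity = -RESTITUTION * velocity
    return height, velocity, impacted


def simulate_until_impact(height, velocity=0.0, dt=1e-3, max_steps=100_000):
    """Return (first impact time, pre-impact velocity, post-impact velocity)."""
    for k in range(max_steps):
        pre_velocity = velocity - G * dt  # velocity just before a possible reset
        height, velocity, impacted = step(height, velocity, dt)
        if impacted:
            return (k + 1) * dt, pre_velocity, velocity
    raise RuntimeError("no impact within max_steps")


if __name__ == "__main__":
    # A small change in the drop height shifts the impact time, so at a fixed
    # time instant the two runs can be on opposite sides of the velocity jump.
    for drop_height in (1.00, 1.02):
        t_impact, v_pre, v_post = simulate_until_impact(drop_height)
        print(f"h0={drop_height:.2f} m: impact at t={t_impact:.3f} s, "
              f"v- = {v_pre:.3f} m/s, v+ = {v_post:.3f} m/s")
```

Even in this simple case, a small perturbation of the initial height changes the time at which the jump occurs, which is one reason why comparing perturbed and nominal trajectories at the same time instant is delicate near impacts.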

Existing Methods

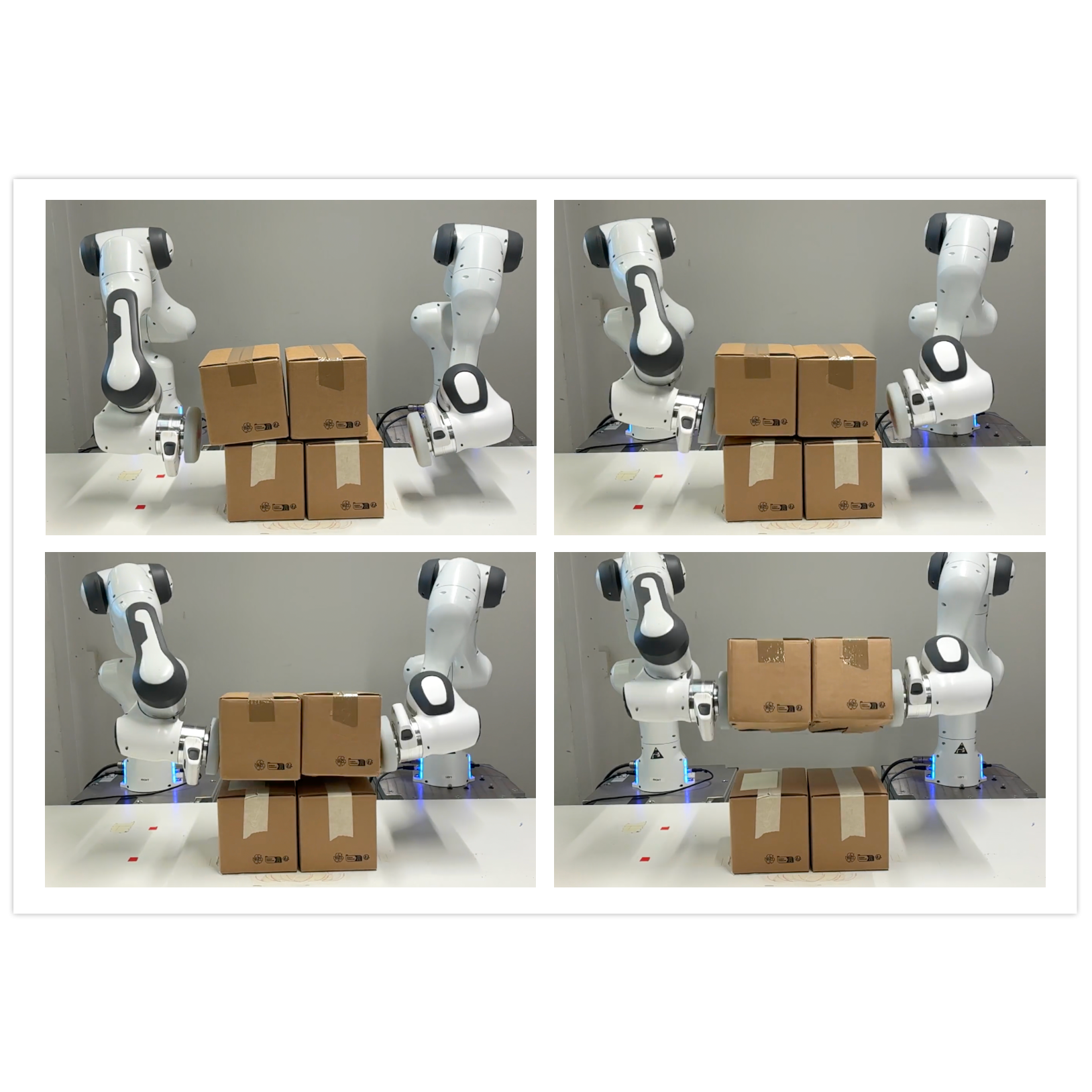

On the one hand, some methods, such as *adversarial training*, have managed to increase the robustness of RL agents to small changes in the environment. On the other hand, via model-based impact-aware robot control, it is possible to mitigate the effect of uncertainties in contact location by switching at contact time between overlapping policies [4] that are in accordance with the underlying impact dynamics. The figure above illustrates the application of this technique on one of the robot setups at TU/e.
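The idea of switching at contact time between overlapping references can be sketched with a deliberately simplified example. This is an illustration under stated assumptions, not the controller of [4]: the two constant velocity references, the function names, and the numerical values are all hypothetical. Both references are assumed to be valid on an interval around the nominal impact time, so the controller can keep tracking the ante-impact one until contact is actually detected.

```python
def ante_impact_velocity_ref(t):
    """Hypothetical ante-impact reference: approach the surface at constant speed.

    Assumed to remain valid slightly past the nominal impact time.
    """
    return -0.2  # [m/s]


def post_impact_velocity_ref(t):
    """Hypothetical post-impact reference: rest on the surface.

    Assumed to be valid slightly before the nominal impact time as well.
    """
    return 0.0  # [m/s]


def select_reference(t, contact_detected, nominal_impact_time, switch_on_contact):
    """Pick the reference by detected contact or, as a baseline, by nominal time."""
    if switch_on_contact:
        use_post = contact_detected
    else:
        use_post = t >= nominal_impact_time
    return post_impact_velocity_ref(t) if use_post else ante_impact_velocity_ref(t)


def velocity_error(t, velocity, contact_detected, nominal_impact_time,
                   switch_on_contact):
    """Tracking error with respect to the selected reference."""
    reference = select_reference(t, contact_detected, nominal_impact_time,
                                 switch_on_contact)
    return velocity - reference


if __name__ == "__main__":
    # Assumed scenario: impact planned at 0.50 s, but contact happens later,
    # so at t = 0.51 s the robot is still approaching at -0.2 m/s.
    t, velocity, contact = 0.51, -0.2, False
    for by_contact in (False, True):
        err = velocity_error(t, velocity, contact, 0.50, by_contact)
        print(f"switch_on_contact={by_contact}: velocity error = {err} m/s")
```

In this scenario, time-based switching compares the ante-impact velocity with the post-impact reference and produces a spurious error, whereas contact-based switching keeps the error at zero until the impact is detected.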

Goal

Considering the current challenges and efforts of the robotics community in this direction [5], in this project, we investigate how to train RL agents for contact-rich and impact-aware tasks, such as grasping [2]. One interesting starting point could be understanding how to merge the reference spreading approach [7] with classical policy gradient methods [3].

Main Tasks

- Perform a literature review on the topic (in both the reinforcement learning and robot control literature) and select example scenarios.

- Develop new impact-aware reinforcement learning theory.

- Perform numerical simulations (own implementation and/or open-source software, depending on the complexity of the selected scenarios) to demonstrate the newly developed approach(es).

Prerequisites

- Appropriate background in probability theory, mechanics, and machine learning, and a strong interest in developing it further in the context of robotics.

- Suitable programming experience (Python, MATLAB, C/C++, ...), potentially also with ML frameworks (e.g., PyTorch, TensorFlow, ...).

Supervision Team

| Daily supervisor | Bram Grooten |
| External supervisor | Alessandro Saccon from the Mechanical Engineering Department |

References

- [1] J. Kober, J. A. Bagnell, and J. Peters. Reinforcement learning in robotics: A survey. Int. J. Robotics Res., 32(11):1238–1274, 2013.

- [2] Z. Luo, J. Cao, S. Christen, A. Winkler, K. Kitani, and W. Xu. Grasping diverse objects with simulated humanoids. arXiv:2407.11385, 2024.

- [3] J. Peters and S. Schaal. Policy gradient methods for robotics. In International Conference on Intelligent Robots and Systems (IROS), pages 2219–2225. IEEE, 2006.

- [4] A. Saccon, N. van de Wouw, and H. Nijmeijer. Sensitivity analysis of hybrid systems with state jumps with application to trajectory tracking. In CDC, pages 3065–3070. IEEE, 2014.

- [5] H. J. Suh, M. Simchowitz, K. Zhang, and R. Tedrake. Do differentiable simulators give better policy gradients? In International Conference on Machine Learning, volume 162, pages 20668–20696, 2022.

- [6] R. S. Sutton and A. G. Barto. Reinforcement Learning: An Introduction. MIT Press, 2nd edition, 2018.

- [7] J. van Steen, G. van den Brandt, N. van de Wouw, J. Kober, and A. Saccon. Quadratic programming-based reference spreading control for dual-arm robotic manipulation with planned simultaneous impacts. IEEE Transactions on Robotics, 2024.

Details

- Supervisor: Bram Grooten

- Secondary supervisor: Thiago Simão

- External location: Mechanical Engineering Department