Project: Deep Clustering: Simultaneous Optimization of Representations and Clustering

Description

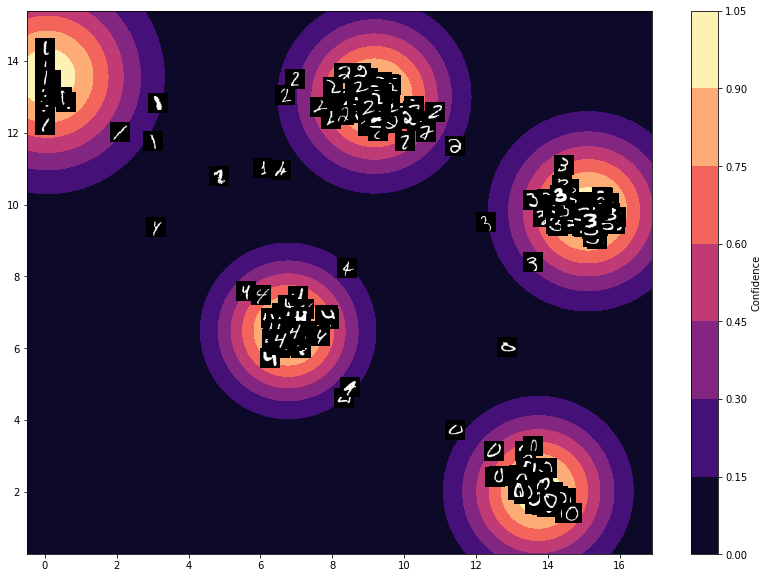

Deep clustering is a well-researched field with promising approaches. Traditional nonconvex clustering methods require the definition of a kernel matrix, whose parameters strongly influence the result and are hence difficult to specify. In contrast, the promise of deep clustering is that a feature transformation can be learned automatically from the data, such that points in the transformed feature space are easy to cluster.

However, the combinatorial optimization of clusters is difficult to combine with the numerical optimization used to train deep neural networks such as autoencoders. Hence, most deep clustering approaches rely on a two-step procedure: the latent space is learned first, and the clustering is then computed on this latent space [1,2]. Few approaches optimize the clustering simultaneously with the representation [3]. In this project, you would develop a novel deep clustering method that combines the recently proposed stochastic gradient descent (SGD) optimization of clusters [4] with the SGD optimization of the representations learned by, e.g., an autoencoder.
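To make the idea of simultaneous optimization concrete, the following is a minimal PyTorch sketch in which one SGD optimizer updates both the autoencoder weights and the cluster centroids. It is not the method of [4] and not the method to be developed in the project: the clustering term is a plain k-means-style centroid loss with hard assignments, chosen only for illustration. The toy data, the names `AutoEncoder`, `squared_distances`, and `joint_loss`, the layer sizes, and the trade-off weight `lam` are all assumptions.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Toy data (assumption): two Gaussian blobs in 10 dimensions.
X = torch.cat([torch.randn(100, 10) + 2.0, torch.randn(100, 10) - 2.0])


class AutoEncoder(nn.Module):
    """Small autoencoder; the layer sizes are arbitrary illustrative choices."""

    def __init__(self, d_in=10, d_latent=2):
        super().__init__()
        self.encoder = nn.Sequential(
            nn.Linear(d_in, 16), nn.ReLU(), nn.Linear(16, d_latent))
        self.decoder = nn.Sequential(
            nn.Linear(d_latent, 16), nn.ReLU(), nn.Linear(16, d_in))

    def forward(self, x):
        z = self.encoder(x)
        return z, self.decoder(z)


def squared_distances(z, centroids):
    # Pairwise squared Euclidean distances, shape (n_points, n_clusters).
    return ((z[:, None, :] - centroids[None, :, :]) ** 2).sum(dim=-1)


def joint_loss(x, z, x_hat, centroids, lam=0.1):
    # Reconstruction error of the autoencoder.
    recon = ((x_hat - x) ** 2).mean()
    # k-means-style term: squared distance of each latent point to its
    # nearest centroid (hard assignment, illustrative stand-in only).
    cluster = squared_distances(z, centroids).min(dim=1).values.mean()
    return recon + lam * cluster


model = AutoEncoder()
centroids = nn.Parameter(torch.randn(2, 2))  # 2 clusters in a 2-d latent space

# One optimizer over network weights AND centroids: both are updated together.
optimizer = torch.optim.SGD(
    list(model.parameters()) + [centroids], lr=0.01, momentum=0.9)

losses = []
for epoch in range(30):
    perm = torch.randperm(X.shape[0])
    epoch_loss = 0.0
    for start in range(0, X.shape[0], 32):
        batch = X[perm[start:start + 32]]
        z, x_hat = model(batch)
        loss = joint_loss(batch, z, x_hat, centroids)
        optimizer.zero_grad()
        loss.backward()
        optimizer.step()
        epoch_loss += loss.item() * batch.shape[0]
    losses.append(epoch_loss / X.shape[0])

# Read off cluster labels from the jointly learned latent space.
with torch.no_grad():
    z, _ = model(X)
    labels = squared_distances(z, centroids).argmin(dim=1)
```

In contrast to the two-step procedure, the centroids here influence the encoder through the gradient of the clustering term. The reconstruction term matters in such a sketch: a centroid loss on its own can be driven to zero by collapsing all latent points onto a single location.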

This project is for (rather) mathematically inclined students who have an affinity for matrix factorization and representation learning. Coding skills and interest (PyTorch) are also needed.

[1] Schnellbach, Janik and Márton Kajó. “Clustering with Deep Neural Networks – An Overview of Recent Methods.” (2020).

[2] Nutakki, Gopi Chand et al. “An Introduction to Deep Clustering.” Clustering Methods for Big Data Analytics (2018).

[3] Tian, Kai et al. “DeepCluster: A General Clustering Framework Based on Deep Learning.” ECML/PKDD (2017).

[4] Hess, Sibylle et al. “BROCCOLI: overlapping and outlier-robust biclustering through proximal stochastic gradient descent.” Data Min. Knowl. Discov. 35 (2021): 2542-2576.

Details

- Supervisor: Sibylle Hess
