Project: Safe reinforcement learning with decision transformers

Description

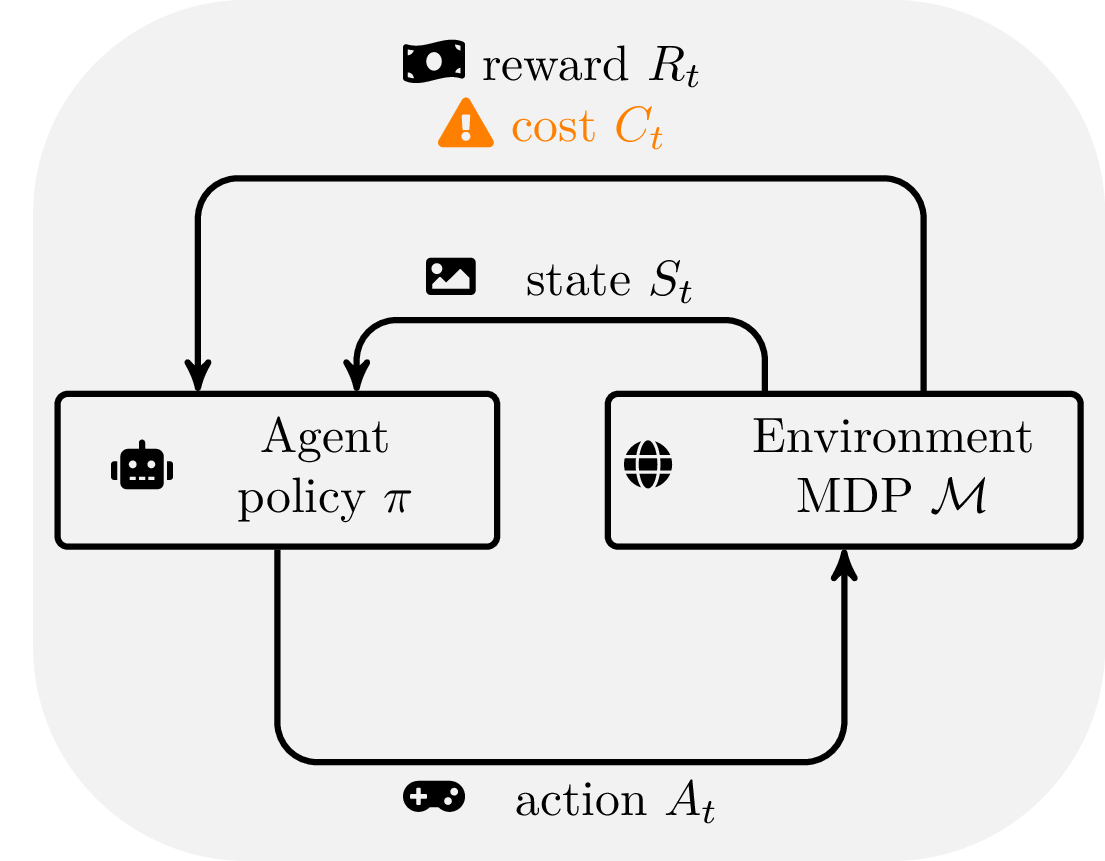

Safety is a core challenge for the deployment of reinforcement learning (RL) in real-world applications [1]. In applications such as recommender systems, safety can mean that the agent must respect budget constraints [2]. In this setting, the RL agent must compute a policy conditioned on the available budget, which is only revealed at deployment time.
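One standard way to write this down borrows from constrained Markov decision processes. The notation here is an illustrative assumption rather than the project's: horizon $T$, per-step reward $r$, per-step cost $c$, and deployment-time budget $B$. The agent maximizes expected return subject to an expected-cost budget. The policy also receives the remaining budget $b_t$ as an extra input, with $b_0 = B$, so that a single policy can serve whatever $B$ is revealed at deployment:

```latex
\max_{\pi}\;
\mathbb{E}_{\pi}\!\left[\sum_{t=0}^{T-1} r(s_t, a_t)\right]
\quad \text{s.t.} \quad
\mathbb{E}_{\pi}\!\left[\sum_{t=0}^{T-1} c(s_t, a_t)\right] \le B,
\qquad
a_t \sim \pi(\cdot \mid s_t, b_t), \quad
b_t = B - \sum_{k=0}^{t-1} c(s_k, a_k).
```

Whether the constraint should hold in expectation, as written here, or on every episode is a modelling choice that this formulation leaves open.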

In recommender systems, it is common to have a static dataset of user ratings for certain items. This has led to the application of offline RL algorithms [3,4], which learn without direct interaction with the environment. However, it remains an open question how to ensure that these algorithms comply with budget constraints.
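The following minimal sketch shows how such a static rating log might be reshaped into the per-user trajectories that offline RL methods consume. Several parts are illustrative assumptions and do not come from the project description: the record types (`Interaction`, `Transition`), the function `build_trajectories`, the choice of the user's last few items as the state, and the use of the rating as the reward.

```python
from collections import defaultdict, deque
from typing import NamedTuple


class Interaction(NamedTuple):
    """One row of a hypothetical static log: a user rated an item at some time."""
    user: str
    item: str
    timestamp: int
    rating: float


class Transition(NamedTuple):
    state: tuple[str, ...]  # assumed state: the user's most recent items
    action: str             # the item shown to the user
    reward: float           # assumed reward: the rating the user gave


def build_trajectories(log: list[Interaction],
                       history_len: int = 3) -> dict[str, list[Transition]]:
    """Group the log by user, order it in time, and emit one transition per rating."""
    by_user = defaultdict(list)
    for row in log:
        by_user[row.user].append(row)

    trajectories = {}
    for user, rows in by_user.items():
        rows.sort(key=lambda r: r.timestamp)
        history = deque(maxlen=history_len)  # keeps only the last history_len items
        steps = []
        for row in rows:
            steps.append(Transition(tuple(history), row.item, row.rating))
            history.append(row.item)
        trajectories[user] = steps
    return trajectories


log = [
    Interaction("u1", "a", timestamp=2, rating=4.0),
    Interaction("u1", "b", timestamp=1, rating=3.0),
    Interaction("u2", "c", timestamp=5, rating=5.0),
]
trajectories = build_trajectories(log)
print(trajectories["u1"])
# [Transition(state=(), action='b', reward=3.0), Transition(state=('b',), action='a', reward=4.0)]
```

No interaction with users happens here. The learner only ever sees what the logging policy chose to show, which is precisely why offline RL methods are needed.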

Recent work has proposed computing policies for offline RL with behavior cloning (BC) techniques [5,6,7]. The central insight is to condition the policy on the desired performance. This project aims to apply the same principle to develop algorithms for RL with budget constraints, where the budget is only revealed at deployment time. In this case, the agent should maximize its performance while staying within the available budget.
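The sketch below illustrates that principle under stated assumptions and is not the project's method. First, it relabels each logged step with its return-to-go, as in the decision transformer [5]. As an assumed extension for the budgeted setting, it also labels each step with its cost-to-go. At deployment, the policy is conditioned on a target return and on the budget revealed at that moment, and both are decremented after every step. The nearest-match `LookupPolicy` is a hypothetical stand-in for a trained sequence model. Every name here is illustrative: `Step`, `Example`, `relabel`, `rollout`, and the toy `env_step`.

```python
from typing import Callable, NamedTuple, Optional


class Step(NamedTuple):
    """One logged step: state, action, reward, and the budget it consumed."""
    state: int
    action: int
    reward: float
    cost: float


class Example(NamedTuple):
    """A logged action tagged with what followed it (hindsight labels)."""
    state: int
    return_to_go: float
    cost_to_go: float
    action: int


def to_go(values: list[float]) -> list[float]:
    """Suffix sums: out[t] = values[t] + ... + values[-1]."""
    out, acc = [0.0] * len(values), 0.0
    for t in reversed(range(len(values))):
        acc += values[t]
        out[t] = acc
    return out


def relabel(trajectory: list[Step]) -> list[Example]:
    """Tag each logged step with the return and the cost that followed it."""
    rtg = to_go([s.reward for s in trajectory])
    ctg = to_go([s.cost for s in trajectory])
    return [Example(s.state, r, c, s.action) for s, r, c in zip(trajectory, rtg, ctg)]


class LookupPolicy:
    """Hypothetical stand-in for a learned pi(a | s, return-to-go, budget).

    Imitates the logged action from the same state whose cost-to-go fits the
    remaining budget and whose return-to-go is closest to the target.
    """

    def __init__(self, examples: list[Example]):
        self.examples = examples

    def act(self, state: int, target_return: float, budget: float) -> Optional[int]:
        feasible = [e for e in self.examples
                    if e.state == state and e.cost_to_go <= budget]
        if not feasible:
            return None  # no logged behaviour fits the remaining budget
        return min(feasible, key=lambda e: abs(e.return_to_go - target_return)).action


EnvStep = Callable[[int, int], tuple[int, float, float, bool]]


def rollout(policy: LookupPolicy, env_step: EnvStep, state: int,
            target_return: float, budget: float, max_steps: int = 10) -> tuple[float, float]:
    """Deployment loop: the budget is only known here, and it shrinks as it is spent."""
    total_reward = total_cost = 0.0
    for _ in range(max_steps):
        action = policy.act(state, target_return, budget)
        if action is None:
            break
        state, reward, cost, done = env_step(state, action)
        total_reward += reward
        total_cost += cost
        target_return -= reward  # condition on the return still to be earned
        budget -= cost           # and on the budget still left to spend
        if done:
            break
    return total_reward, total_cost


# Toy environment: action 1 is rewarding but expensive, action 0 is cheap.
def env_step(state: int, action: int) -> tuple[int, float, float, bool]:
    reward, cost = (5.0, 3.0) if action == 1 else (2.0, 1.0)
    return state + 1, reward, cost, state + 1 >= 2


expensive = [Step(0, 1, 5.0, 3.0), Step(1, 1, 5.0, 3.0)]
cheap = [Step(0, 0, 2.0, 1.0), Step(1, 0, 2.0, 1.0)]
policy = LookupPolicy(relabel(expensive) + relabel(cheap))

print(rollout(policy, env_step, state=0, target_return=10.0, budget=6.0))  # (10.0, 6.0)
print(rollout(policy, env_step, state=0, target_return=10.0, budget=2.0))  # (4.0, 2.0)
```

Conditioning on cost-to-go lets the same logged data serve different budgets. With a budget of 6, the sketch reproduces the expensive behaviour. With a budget of 2, it falls back to the cheap behaviour. A lookup table, however, cannot generalize to unseen states or budgets. It also gives no formal guarantee that the budget is respected, and that guarantee is the kind of result the project is after.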

References

[1] Dulac-Arnold, G., Levine, N., Mankowitz, D. J., Li, J., Paduraru, C., Gowal, S., and Hester, T. (2021). Challenges of real-world reinforcement learning: definitions, benchmarks and analysis. Machine Learning, 110(9):2419–2468.

[2] Boutilier, C. and Lu, T. (2016). Budget allocation using weakly coupled, constrained Markov decision processes. In UAI, pages 52–61. AUAI Press.

[3] Singh, A., Halpern, Y., Thain, N., Christakopoulou, K., Chi, H., Chen, J., and Beutel, A. (2020). Building Healthy Recommendation Sequences for Everyone: A Safe Reinforcement Learning Approach. In FAccTRec Workshop at ACM RecSys.

[4] Afsar, M. M., Crump, T., and Far, B. H. (2023). Reinforcement learning based recommender systems: A survey. ACM Comput. Surv., 55(7):145:1–145:38.

[5] Chen, L., Lu, K., Rajeswaran, A., Lee, K., Grover, A., Laskin, M., Abbeel, P., Srinivas, A., and Mordatch, I. (2021). Decision transformer: Reinforcement learning via sequence modeling. In NeurIPS, pages 15084–15097.

[6] Emmons, S., Eysenbach, B., Kostrikov, I., and Levine, S. (2022). RvS: What is essential for offline RL via supervised learning? In ICLR. OpenReview.net.

[7] Ajay, A., Du, Y., Gupta, A., Tenenbaum, J. B., Jaakkola, T. S., and Agrawal, P. (2023). Is conditional generative modeling all you need for decision making? In ICLR. OpenReview.net.

Details

- Supervisor: Thiago Simão
