Project: Procedural 3D Environment Generation for Image-Based Reinforcement Learning

Description

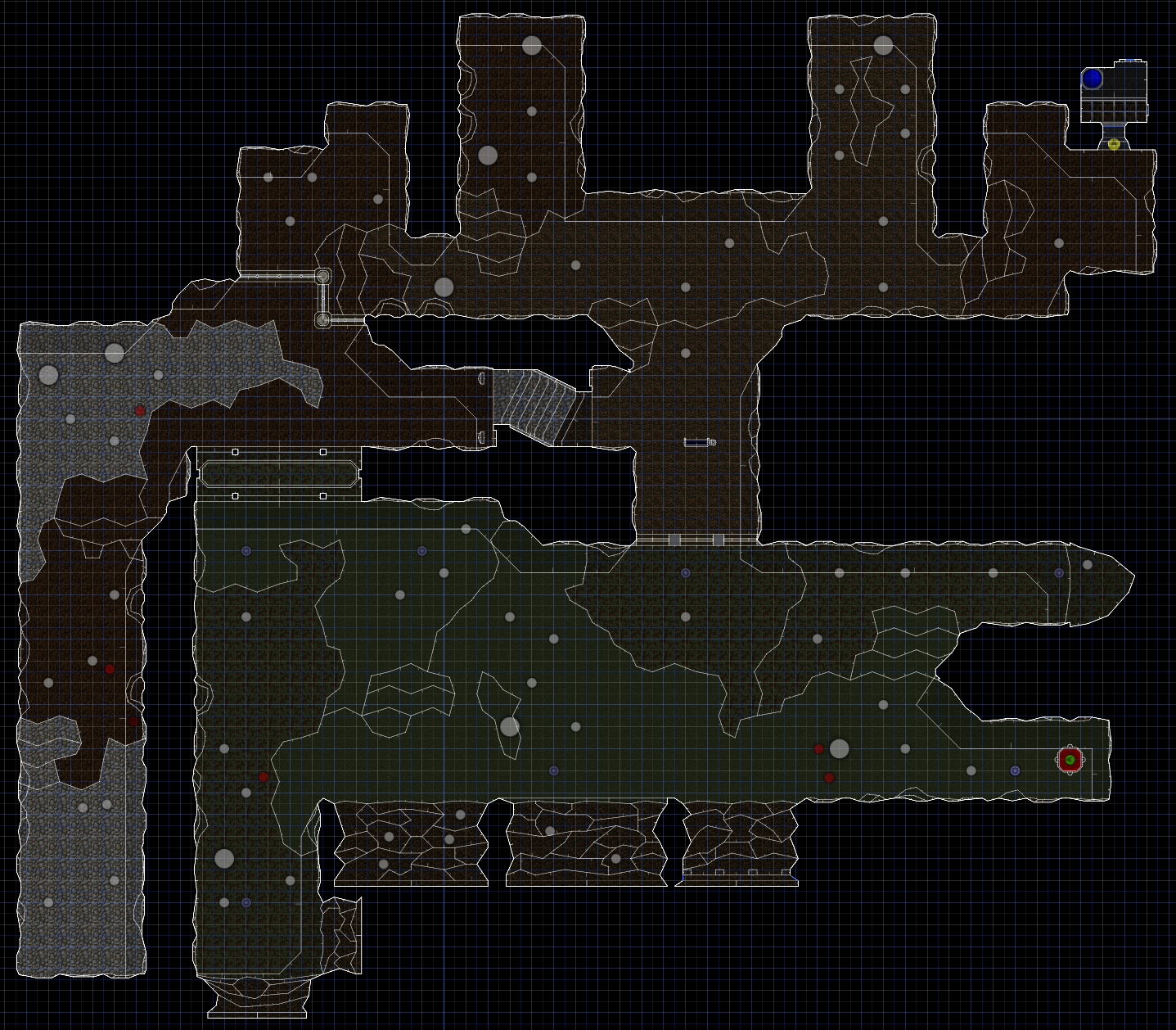

As autonomous systems evolve, static simulation environments for training reinforcement learning (RL) agents increasingly fail to prepare algorithms for real-world variability. Procedural content generation (PCG) [5] in 3D environments offers a low-cost way to automatically create a near-infinite variety of dynamic training scenarios. This could improve the adaptability and generalization of RL agents.

Challenge

Efficient 3D simulation environments are typically powered by complex engines and developed with specialized software. The map layout, the placement of objects and entities, and the dynamic behaviors within these environments generally have to be programmed by hand. Fully automating this process through PCG is non-trivial. Generating environments on the fly often produces unstructured layouts or unsolvable tasks. It can also fail to adjust the difficulty to the agent's learning progress [4].
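A common way to avoid unsolvable tasks is generate-and-test. The generator samples a candidate layout, checks it, and resamples if the check fails. The sketch below illustrates this on a 2D floor plan, which a 3D scene builder could later extrude into walls. The grid encoding, the function names, and the parameters (`wall_density`, `max_tries`) are hypothetical. Breadth-first search stands in for whatever solvability check a real task would need.

```python
import random
from collections import deque

# Hypothetical floor-plan encoding: '#' wall, '.' floor, 'S' agent start, 'G' goal.
Grid = list[list[str]]


def generate_layout(width: int, height: int, wall_density: float, rng: random.Random) -> Grid:
    """Sample a floor plan; each cell becomes a wall independently with probability wall_density."""
    grid = [["#" if rng.random() < wall_density else "." for _ in range(width)] for _ in range(height)]
    grid[0][0] = "S"                    # start in the top-left corner
    grid[height - 1][width - 1] = "G"   # goal in the bottom-right corner
    return grid


def is_solvable(grid: Grid) -> bool:
    """Breadth-first search from 'S' to 'G' over 4-connected non-wall cells."""
    height, width = len(grid), len(grid[0])
    start = next(((r, c) for r in range(height) for c in range(width) if grid[r][c] == "S"), None)
    if start is None:
        return False
    queue = deque([start])
    seen = {start}
    while queue:
        r, c = queue.popleft()
        if grid[r][c] == "G":
            return True
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < height and 0 <= nc < width and (nr, nc) not in seen and grid[nr][nc] != "#":
                seen.add((nr, nc))
                queue.append((nr, nc))
    return False


def sample_solvable_layout(seed: int, width: int = 8, height: int = 8,
                           wall_density: float = 0.3, max_tries: int = 1000) -> Grid:
    """Generate-and-test: resample until the layout is solvable. The same seed gives the same layout."""
    rng = random.Random(seed)
    for _ in range(max_tries):
        grid = generate_layout(width, height, wall_density, rng)
        if is_solvable(grid):
            return grid
    raise RuntimeError("no solvable layout found; try a lower wall_density")


if __name__ == "__main__":
    layout = sample_solvable_layout(seed=42)
    print("\n".join("".join(row) for row in layout))
```

Seeding the generator makes every level reproducible from a single integer. This is useful when comparing agents on the same held-out levels. Rejection sampling is simple but wastes samples when most candidates fail, so a real generator would likely build connectivity in by construction.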

Existing Methods

PCG has been widely used in 2D gridworld and arcade-style environments [1, 2] to assess the generalization capabilities of RL agents. While effective, these environments lack complexity and realism. 3D platforms such as Minecraft, DeepMind Lab, and AI2-THOR [3] have also adopted PCG to enrich RL training environments. However, these platforms are slow to simulate without effective parallelization. They also do not directly support automatically adapting environment generation during training.

Goal

The goal is to develop a method for creating novel environments on demand across a variety of scenarios. The method should improve training protocols and produce more generalizable agents by crafting environments specifically designed to maximize the agents' learning potential. Recent advances in large language models (LLMs) make it possible to generate environments directly from text descriptions. If environments can be represented as text files, LLMs could dynamically generate intricate training scenarios. This could make agent training more flexible and effective.
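To illustrate what "environments as text files" could mean, the sketch below parses an ASCII floor plan, such as one an LLM might emit, into 3D object placements on a flat floor. The map format, the `LEGEND` symbols, and the `Placement` type are hypothetical. They are not the level format of any particular engine.

```python
from dataclasses import dataclass

# Hypothetical legend mapping map characters to object types in the 3D scene ('.' is empty floor).
LEGEND = {"#": "wall", "S": "agent_spawn", "G": "goal", "K": "key", "D": "door"}


@dataclass(frozen=True)
class Placement:
    kind: str
    x: float  # world-space coordinate along the map columns
    y: float  # height above the floor
    z: float  # world-space coordinate along the map rows


def parse_level(text: str, cell_size: float = 1.0) -> list[Placement]:
    """Convert an ASCII floor plan into 3D placements, rejecting malformed input."""
    rows = [line.strip() for line in text.strip().splitlines() if line.strip()]
    if not rows or len({len(row) for row in rows}) != 1:
        raise ValueError("level must be a non-empty rectangle of symbols")
    placements = []
    for r, row in enumerate(rows):
        for c, ch in enumerate(row):
            if ch == ".":
                continue  # empty floor cell, nothing to place
            if ch not in LEGEND:
                raise ValueError(f"unknown symbol {ch!r} at row {r}, column {c}")
            placements.append(Placement(LEGEND[ch], c * cell_size, 0.0, r * cell_size))
    kinds = [p.kind for p in placements]
    if kinds.count("agent_spawn") != 1 or "goal" not in kinds:
        raise ValueError("level needs exactly one spawn and at least one goal")
    return placements


if __name__ == "__main__":
    level = """
    #####
    #S.G#
    #####
    """
    for placement in parse_level(level):
        print(placement)
```

The parser checks every symbol against the legend, so malformed LLM output fails loudly instead of silently producing a broken scene. Structural checks such as reachability would still be needed on top of this.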

Main Tasks

Literature Review: Study existing PCG techniques and the basics of LLMs and image-based RL.

Environment Development: Develop a PCG method capable of producing diverse and complex 3D environments.

Framework Integration: Incorporate environment generation into an efficient training framework, such as Sample-Factory or JAXRL.

Empirical Evaluation: Assess the effectiveness of these environments in enhancing the robustness of RL agents.

Thesis Documentation: Document the research process, development details, and experimental findings.
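Integrating generation into the training framework also means the generator has to react to the agent's progress. [4] does this with a learned adversary that maximizes regret. The sketch below swaps in a much simpler heuristic: it nudges a single difficulty parameter so that the agent's recent success rate stays inside a target band. The class name, thresholds, and window size are hypothetical. The difficulty value could drive any generator knob, such as obstacle density.

```python
from collections import deque


class DifficultyController:
    """Hypothetical success-rate controller: keeps the recent success rate
    within [low, high] by nudging one difficulty parameter up or down."""

    def __init__(self, difficulty: float = 0.1, step: float = 0.05,
                 low: float = 0.4, high: float = 0.8, window: int = 100,
                 min_difficulty: float = 0.0, max_difficulty: float = 0.5):
        self.difficulty = difficulty
        self.step = step
        self.low, self.high = low, high
        self.min_difficulty, self.max_difficulty = min_difficulty, max_difficulty
        self.results: deque = deque(maxlen=window)  # most recent episode outcomes

    def record(self, success: bool) -> None:
        """Log one episode outcome and adapt once a full window has been observed."""
        self.results.append(success)
        if len(self.results) < self.results.maxlen:
            return
        rate = sum(self.results) / len(self.results)
        if rate > self.high:    # too easy: make levels harder
            self.difficulty = min(self.difficulty + self.step, self.max_difficulty)
            self.results.clear()  # re-measure at the new difficulty
        elif rate < self.low:   # too hard: make levels easier
            self.difficulty = max(self.difficulty - self.step, self.min_difficulty)
            self.results.clear()
```

In a training loop, each environment reset would read `controller.difficulty` when sampling the next level and call `record` when the episode ends. The window is cleared after every change, so outcomes from the old difficulty do not trigger a second adjustment.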

References

[1] Earle, Sam, Zehua Jiang, and Julian Togelius. "PCGRL+: Scaling, Control and Generalization in Reinforcement Learning Level Generators." arXiv preprint arXiv:2408.12525 (2024).

[2] Cobbe, Karl, et al. "Leveraging Procedural Generation to Benchmark Reinforcement Learning." International Conference on Machine Learning. PMLR (2020).

[3] Kolve, Eric, et al. "AI2-THOR: An Interactive 3D Environment for Visual AI." arXiv preprint arXiv:1712.05474 (2017).

[4] Dennis, Michael, et al. "Emergent Complexity and Zero-Shot Transfer via Unsupervised Environment Design." Advances in Neural Information Processing Systems 33 (2020): 13049-13061.

[5] Shaker, Noor, Julian Togelius, and Mark J. Nelson. "Procedural Content Generation in Games." Springer (2016).

Details

- Supervisor: Tristan Tomilin

- Secondary supervisor: Meng Fang

- Interested? Get in contact.