Project: High-performance Safe RL Benchmarking

Description

As AI systems become increasingly integral to critical sectors, ensuring their safety and reliability is essential. Reinforcement Learning (RL) is a prominent approach in which an agent learns optimal behaviors through trial-and-error interactions with a dynamic environment. Yet the stakes are high: in physical settings, a wrong move can result in serious damage or even catastrophic failure. Training agents in simulation avoids the safety concerns associated with real-world experimentation. Consequently, the availability of meaningful simulation environments is important for safe RL research.

Challenge

A notable barrier in Safe RL research is the lack of simulation environments that are both realistic and computationally efficient. Complex tasks with accurate physics modeling consume extensive resources, which raises the cost of developing and training novel methods. This constraint not only increases the time and budget required but also limits how readily new methods can be applied to real-world scenarios.

Existing Methods

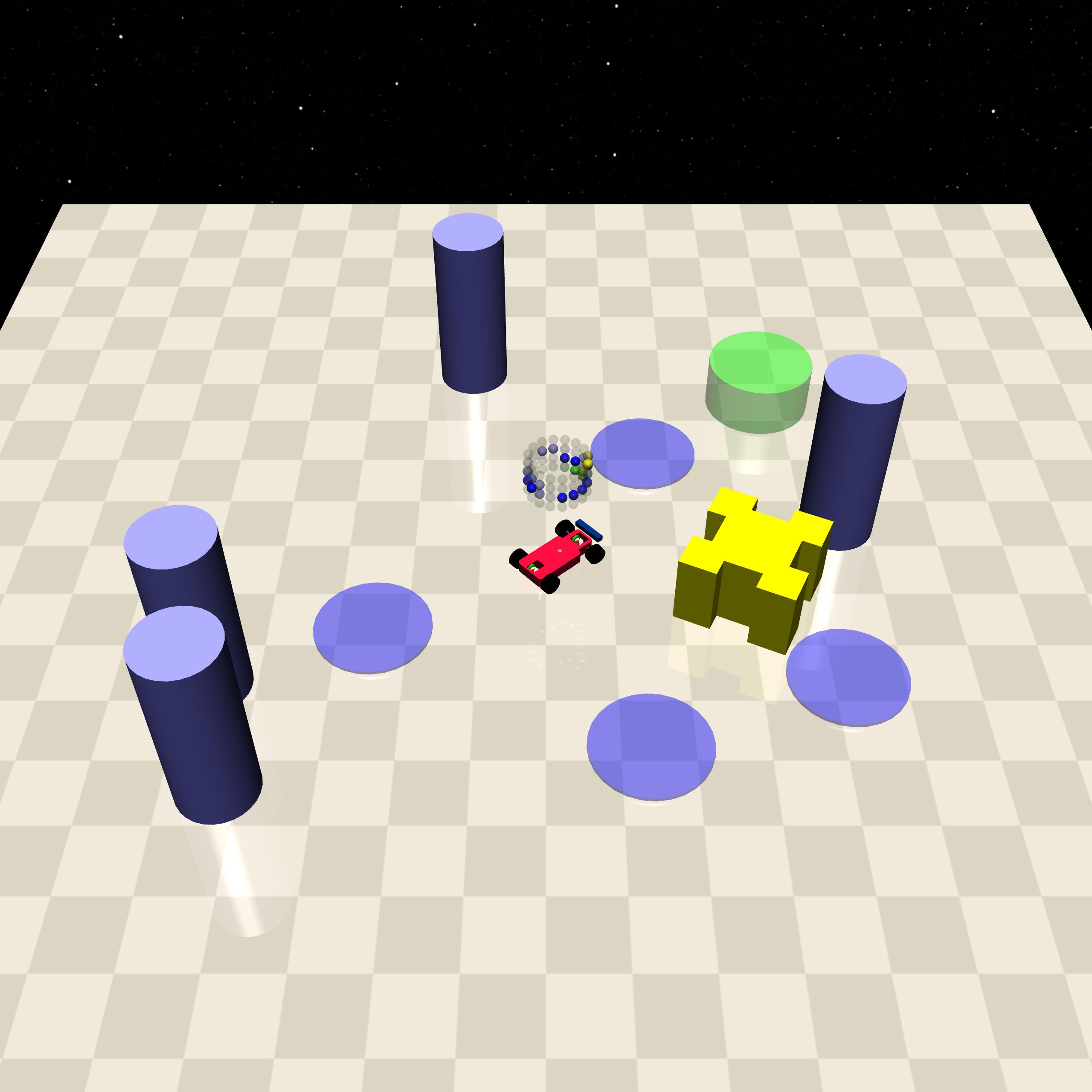

Environments such as the AI Safety Gridworlds (Leike et al. 2017) focus on simple toy problems that are computationally light but lack complexity and realism. Conversely, more dynamic environments like Safety-Gymnasium (Ji et al. 2023) feature 3D navigation scenarios with physics-based collisions (illustrated in the figure above). However, these come at the cost of higher computational demands. This trade-off complicates the development and testing of algorithms intended for practical, real-world applications, where both realism and computational efficiency are important.

Goal

This project aims to extend the high-performance Brax (Freeman et al. 2021) physics engine with tasks and environments from Safety-Gymnasium, which are designed specifically to benchmark safety in RL. This integration will allow more diverse and complex scenarios to be simulated efficiently, supporting a more comprehensive evaluation of safe RL methods on robotic continuous control problems.
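To give a rough sense of what porting a safety signal to JAX could look like, the sketch below computes a binary hazard cost for many environments at once. It is an illustration only: the function `hazard_cost`, the constant `HAZARD_RADIUS`, and the 2D circular-hazard layout are hypothetical and are not taken from Brax or Safety-Gymnasium.

```python
import jax
import jax.numpy as jnp

# Hypothetical hazard size, chosen only for this illustration.
HAZARD_RADIUS = 0.2


def hazard_cost(agent_xy, hazards_xy, radius=HAZARD_RADIUS):
    """Return 1.0 if the agent lies inside any circular hazard, else 0.0.

    agent_xy:   array of shape (2,), the agent's planar position.
    hazards_xy: array of shape (num_hazards, 2), hazard centers.
    """
    dists = jnp.linalg.norm(hazards_xy - agent_xy, axis=-1)
    return jnp.any(dists < radius).astype(jnp.float32)


# vmap maps the single-environment function over a leading batch axis,
# and jit compiles the batched version into one accelerator-friendly call.
batched_hazard_cost = jax.jit(jax.vmap(hazard_cost, in_axes=(0, 0)))

# Two environments, each with two hazards.
agents = jnp.array([[0.0, 0.0], [1.0, 1.0]])
hazards = jnp.array([
    [[0.1, 0.0], [2.0, 2.0]],  # env 0: agent is 0.1 away from a hazard center
    [[0.0, 0.0], [3.0, 3.0]],  # env 1: agent is far from both hazards
])
costs = batched_hazard_cost(agents, hazards)
```

Writing the cost as a pure function of arrays is what lets `jax.vmap` and `jax.jit` batch it across thousands of simulated environments, which is the kind of throughput gain the project is after.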

Main Tasks

Conduct a comprehensive review of existing Safe RL environments and their limitations.

Integrate Safety-Gymnasium tasks into Brax and expand to more complex scenarios.

Implement popular safe RL algorithms in JAX and evaluate their effectiveness.

Experimentally analyze the usefulness and imposed complexity of the new environments.

Document the integration process, experimental results, and conclusions in a thesis.
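The proposal does not name the algorithms to be implemented. As one possible example, many safe RL methods use a Lagrangian relaxation of a cost constraint, where a multiplier grows while the average episode cost exceeds a limit. The sketch below shows that multiplier update in JAX. The names `lagrangian_objective`, `update_multiplier`, `cost_limit`, and `lr`, and all numeric values, are assumptions made for illustration.

```python
import jax
import jax.numpy as jnp


def lagrangian_objective(mean_return, mean_cost, lam, cost_limit):
    """Penalized objective a policy would maximize for a fixed multiplier."""
    return mean_return - lam * (mean_cost - cost_limit)


@jax.jit
def update_multiplier(lam, mean_cost, cost_limit, lr=0.05):
    """One projected gradient-ascent step on the Lagrange multiplier.

    The multiplier increases when the constraint is violated
    (mean_cost > cost_limit) and is clipped at zero otherwise.
    """
    return jnp.maximum(lam + lr * (mean_cost - cost_limit), 0.0)


cost_limit = 25.0  # hypothetical budget on cumulative episode cost

# Constraint violated: average cost 30 exceeds the limit of 25.
episode_costs = jnp.array([30.0, 40.0, 20.0])
lam_after_violation = update_multiplier(0.0, jnp.mean(episode_costs), cost_limit)

# Constraint satisfied: the multiplier shrinks and is clipped at zero.
lam_after_safe = update_multiplier(lam_after_violation, 10.0, cost_limit)
```

In a full algorithm this update would alternate with policy optimization on the penalized objective; only the multiplier step is shown here.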

References

Freeman, C. Daniel, Erik Frey, Anton Raichuk, Sertan Girgin, Igor Mordatch, and Olivier Bachem. 2021. “Brax–a Differentiable Physics Engine for Large Scale Rigid Body Simulation.” arXiv Preprint arXiv:2106.13281.

Ji, Jiaming, Borong Zhang, Jiayi Zhou, Xuehai Pan, Weidong Huang, Ruiyang Sun, Yiran Geng, Yifan Zhong, Josef Dai, and Yaodong Yang. 2023. “Safety Gymnasium: A Unified Safe Reinforcement Learning Benchmark.” Advances in Neural Information Processing Systems 36.

Leike, Jan, Miljan Martic, Victoria Krakovna, Pedro A. Ortega, Tom Everitt, Andrew Lefrancq, Laurent Orseau, and Shane Legg. 2017. “AI Safety Gridworlds.” arXiv Preprint arXiv:1711.09883.

Details

- Student: Mourad Boustani
- Supervisor: Tristan Tomilin
- Secondary supervisor: Thiago Simão