Project: Robust, auto-incremental learning

Description

Incremental learning techniques solve one task after another without starting from scratch: each time, training starts from the model learned on the previous task. A current limitation is that these techniques have hyperparameters that control, for instance, how fast the model adapts to new tasks or forgets previous ones. These hyperparameters are usually kept constant across a stream of tasks. This assumes that, regardless of the set of learning tasks, the model always requires the same fixed balance between the classification and anti-forgetting objectives.

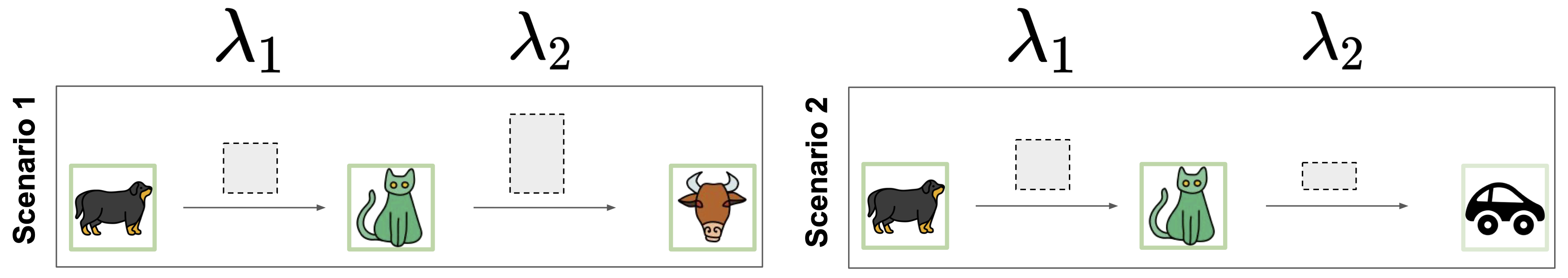

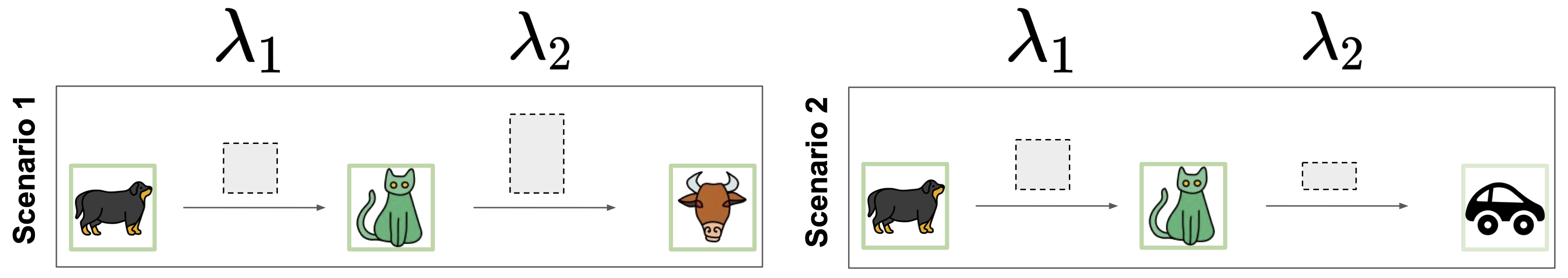

Such an assumption is unrealistic, as the figure illustrates. It depicts two scenarios in which the learner observes either cows or cars after learning the tasks of dogs and cats. In the first scenario, the model has already been exposed to semantically similar (animal) categories. As a result, the incremental learner may not need to learn everything from scratch, which reduces the importance of the classification loss. In contrast, to learn a completely distinct concept such as a car, it may be preferable to relax the anti-forgetting objective and learn more.
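One way to make this trade-off concrete is sketched below. The description above does not fix a formulation, so the notation is an assumption: $\theta$ denotes the model parameters, $\mathcal{D}_t$ the data of task $t$, $\theta_{t-1}$ the model after the previous task, and $\lambda_t$ the weight of the anti-forgetting term.

$$
\mathcal{L}_t(\theta) = \mathcal{L}_{\text{cls}}(\theta; \mathcal{D}_t) + \lambda_t \, \mathcal{L}_{\text{reg}}(\theta; \theta_{t-1})
$$

Keeping the hyperparameter constant corresponds to $\lambda_t = \lambda$ for every task $t$. Under this reading, the two scenarios in the figure suggest a different $\lambda_t$ for the cow task than for the car task.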

Automated Machine Learning (AutoML) can predict the magnitude of anti-forgetting per task via Hyperparameter Optimization (HPO) (Yu and Zhu, 2020). In HPO, the goal is to set the hyperparameters automatically, with little to no human involvement. Two prominent families of techniques are Bayesian optimization (Snoek et al., 2012) and gradient-based methods (Baydin et al., 2018). How to apply these in incremental learning so that the model adapts effectively to each new task is still poorly understood, but it is a promising route to new state-of-the-art results.
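As a rough illustration of choosing the anti-forgetting weight per task, the sketch below runs a plain grid search on a toy problem. It is not Bayesian optimization or a gradient-based method, and it is not the method of any cited paper. Everything in it is a hypothetical stand-in: each "task" is a one-dimensional quadratic loss, the training data gives only a noisy view of the new task's optimum, and a small held-out set for both the new and the previous task is assumed to be available for scoring.

```python
# Toy sketch: per-task selection of the anti-forgetting weight (lambda).
# All names and the 1-D quadratic "tasks" are hypothetical illustrations.

def fit(theta_prev, train_target, lam):
    """Minimise (theta - train_target)^2 + lam * (theta - theta_prev)^2 in closed form."""
    return (train_target + lam * theta_prev) / (1.0 + lam)

def validation_score(theta, new_target, old_target):
    """Held-out error on the new task plus error on the previous task (lower is better)."""
    return (theta - new_target) ** 2 + (theta - old_target) ** 2

def select_lambda(theta_prev, train_target, new_target, old_target, candidates):
    """Grid search: return the candidate lambda with the lowest validation score."""
    return min(
        candidates,
        key=lambda lam: validation_score(
            fit(theta_prev, train_target, lam), new_target, old_target
        ),
    )

CANDIDATES = [0.0, 0.1, 1.0, 10.0, 100.0]
THETA_PREV = 0.0   # model after the earlier tasks (e.g. dogs and cats)
OLD_TARGET = 0.0   # optimum of the earlier tasks
NOISE = 0.5        # training data only gives a noisy view of the new optimum

# Similar new task: its optimum lies close to the previous model.
lam_similar = select_lambda(THETA_PREV, 0.2 + NOISE, 0.2, OLD_TARGET, CANDIDATES)
# Dissimilar new task: its optimum lies far from the previous model.
lam_dissimilar = select_lambda(THETA_PREV, 5.0 + NOISE, 5.0, OLD_TARGET, CANDIDATES)

print(lam_similar, lam_dissimilar)
```

In this toy setup the search selects a larger weight for the similar task (10.0) than for the dissimilar one (1.0), so a single fixed value would be suboptimal for at least one of them. A Bayesian or gradient-based optimizer would replace `select_lambda` while the surrounding per-task loop stays the same.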

Reading:

Yu, T. and Zhu, H. (2020). Hyper-parameter optimization: A review of algorithms and applications. arXiv preprint arXiv:2003.05689.

Baydin, A. G., Pearlmutter, B. A., Radul, A. A., and Siskind, J. M. (2018). Automatic differentiation in machine learning: a survey. JMLR.

Snoek, J., Larochelle, H., and Adams, R. P. (2012). Practical Bayesian optimization of machine learning algorithms. NeurIPS 2012, 25.

Gok, E. C., Yildirim, M. O., Kilickaya, M., and Vanschoren, J. (2023). Adaptive regularization for class-incremental learning. AutoML Conference 2023.

Details

- Supervisor: Joaquin Vanschoren
